
Unity Shader Notes

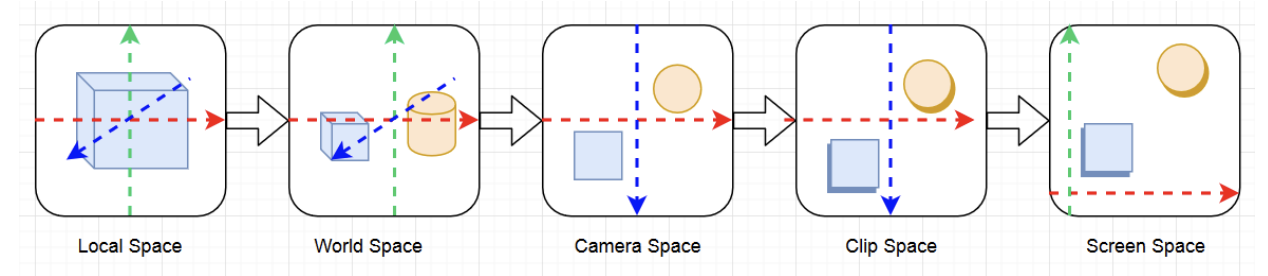

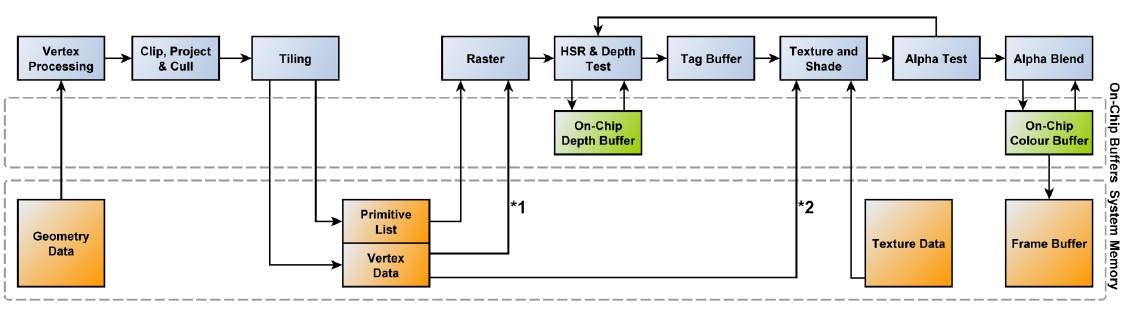

Pipeline

Texture

Mesh VertexBuffer pos/uv/normal/color IndexBuffer

Gamma / Linear Pipeline (HDR)

Rendering Forward Forward Plus Deferred

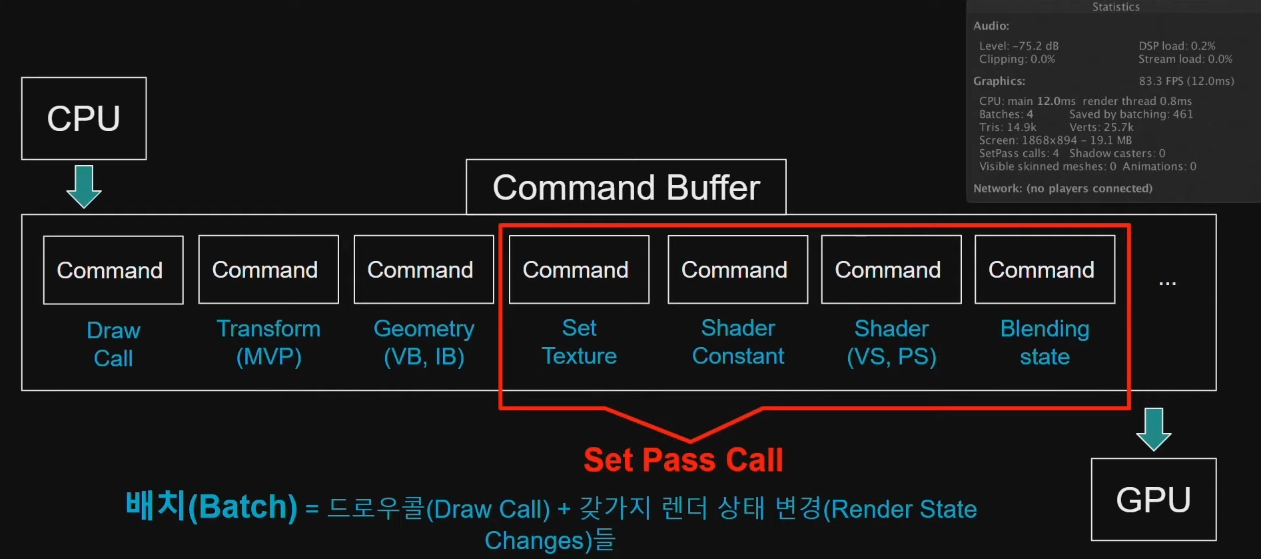

CPU =DrawCall=> GPU

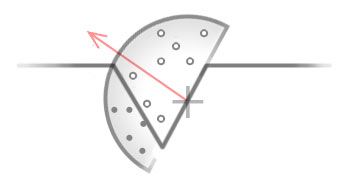

Culling

Input Assembly Shader:Vertex object/world/camera/clip Shader:Hull Shader:Tesselator Shader:Domain Shader:Geometry

Rasterizer

Interpolation between vertices

Early Depth Testing

Shader:Pixel

Depth Testing

Render Target Output

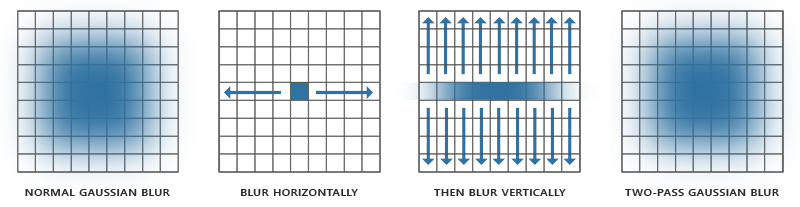

Post-process: (HDR) Luminance, (HDR) Bloom, (HDR) Eye Adaptation, (HDR) Tone Mapping

SSR SSAO SSGI Motion Blur

Depth of Field - Bokeh(geometry / gather) Color Filtering Gamma Control

AA

LOD HLOD Lumen

Vector

Swizzling

The components of a vector can be recombined in any order.

float4 A = float4(1, 2, 3, 4);

A.x == 1

A.xy == float2(1, 2)

A.wwxy == float4(4, 4, 1, 2)

A.rgba == float4(1, 2, 3, 4)

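Dot product and cross product

- Know the formulas for the dot product and the cross product.

- Be able to picture both of them visually.

- The Korean names (내적 / 외적) are easy to mix up.

| 내적 ("inner") | Dot Product | Inner Product |

- A dot is a point, and points gather inward, so the dot product is the "inner" one.

- Because it is a point, two vectors collapse into a single value (a scalar).

- Collapsing into one value is what lets you get the angle between the two vectors.

- It is an angle, so a cosine is involved: dot(a, b) = |a| |b| cos(theta).

- https://rfriend.tistory.com/145

- Commutative: dot(a, b) == dot(b, a).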

| Angle (degrees) | Dot product of unit vectors |
| ---- | --- |
| 0 | 1 |
| 90 | 0 |
| 180 | -1 |
| -270 | 0 |

1

|

|

0-------+------ 0

|

|

-1

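| 외적 ("outer") | Cross Product | Outer Product (literal translation of 외적; in English-language linear algebra, "outer product" usually names a different operation) |

- A cross looks spiky, so remember it as the "outer" one.

- It is an X, so it sticks out.

- The strokes of an X meet at right angles, so use it to get a perpendicular vector.

- https://rfriend.tistory.com/146

- Not commutative: cross(a, b) == -cross(b, a).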
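The angle table and the commutativity notes above can be checked numerically. This is a minimal sketch in plain C# using `System.Numerics` (not Unity's `Vector3`); the class and method names are made up for illustration.

```csharp
using System;
using System.Numerics;

public static class DotCrossDemo
{
    // For unit vectors, the dot product equals cos(angle between them).
    public static float CosBetween(Vector3 a, Vector3 b) =>
        Vector3.Dot(Vector3.Normalize(a), Vector3.Normalize(b));

    public static void Main()
    {
        var right = new Vector3(1, 0, 0);
        var up = new Vector3(0, 1, 0);

        Console.WriteLine(CosBetween(right, right));  // same direction: 1
        Console.WriteLine(CosBetween(right, up));     // perpendicular: 0
        Console.WriteLine(CosBetween(right, -right)); // opposite: -1

        // The cross product is perpendicular to both inputs
        // and flips sign when the operands are swapped.
        Vector3 c = Vector3.Cross(right, up);
        Console.WriteLine(Vector3.Dot(c, right));               // 0
        Console.WriteLine(c == -Vector3.Cross(up, right));      // True
    }
}
```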

Matrix

If w == 1, then the vector (x,y,z,1) is a position in space. If w == 0, then the vector (x,y,z,0) is a direction

// Mind the order

TransformedVector = TranslationMatrix * RotationMatrix * ScaleMatrix * OriginalVector;

// ref: https://www.3dgep.com/3d-math-primer-for-game-programmers-matrices/#Rotation_about_an_arbitrary_axis
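To see why the multiplication order and the w component matter, here is a small sketch in plain C# (column-vector convention, matching the formula above). Rotation is left out to keep it short, and all names are illustrative rather than Unity API.

```csharp
using System;

public static class TrsOrderDemo
{
    // Matrix * matrix (4x4), column-vector convention.
    public static float[,] Mul(float[,] a, float[,] b)
    {
        var r = new float[4, 4];
        for (int i = 0; i < 4; i++)
            for (int j = 0; j < 4; j++)
                for (int k = 0; k < 4; k++)
                    r[i, j] += a[i, k] * b[k, j];
        return r;
    }

    // Matrix * homogeneous column vector.
    public static float[] Mul(float[,] m, float[] v)
    {
        var r = new float[4];
        for (int i = 0; i < 4; i++)
            for (int k = 0; k < 4; k++)
                r[i] += m[i, k] * v[k];
        return r;
    }

    public static float[,] Translation(float x, float y, float z) => new float[,]
        { { 1, 0, 0, x }, { 0, 1, 0, y }, { 0, 0, 1, z }, { 0, 0, 0, 1 } };

    public static float[,] Scale(float x, float y, float z) => new float[,]
        { { x, 0, 0, 0 }, { 0, y, 0, 0 }, { 0, 0, z, 0 }, { 0, 0, 0, 1 } };

    public static void Main()
    {
        var t = Translation(10, 0, 0);
        var s = Scale(2, 2, 2);
        float[] point = { 1, 0, 0, 1 };     // w = 1: a position
        float[] direction = { 1, 0, 0, 0 }; // w = 0: a direction

        Console.WriteLine(Mul(Mul(t, s), point)[0]);     // scale, then translate: 12
        Console.WriteLine(Mul(Mul(s, t), point)[0]);     // translate, then scale: 22
        Console.WriteLine(Mul(Mul(t, s), direction)[0]); // translation has no effect: 2
    }
}
```

The matrix closest to the vector is applied first, which is why `T * R * S * v` scales, then rotates, then translates.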

Translation matrix

| 1 0 0 x |

| 0 1 0 y |

| 0 0 1 z |

| 0 0 0 1 |

Scale

| x 0 0 0 |

| 0 y 0 0 |

| 0 0 z 0 |

| 0 0 0 1 |

Rotation about the X axis

| 1 0 0 0 |

| 0 cos -sin 0 |

| 0 sin cos 0 |

| 0 0 0 1 |

Rotation about the Y axis

| cos 0 sin 0 |

| 0 1 0 0 |

| -sin 0 cos 0 |

| 0 0 0 1 |

Rotation about the Z axis

| cos -sin 0 0 |

| sin cos 0 0 |

| 0 0 1 0 |

| 0 0 0 1 |

Rotation about an arbitrary axis N

s : sin

c : cos

ic: 1 - cos

| ic * NxNx + c | ic * NxNy - s * Nz | ic * NzNx + s * Ny | 0 |

| ic * NxNy + s * Nz | ic * NyNy + c | ic * NyNz - s * Nx | 0 |

| ic * NzNx - s * Ny | ic * NyNz + s * Nx | ic * NzNz + c | 0 |

| 0 | 0 | 0 | 1 |
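As a sanity check on the arbitrary-axis matrix, the sketch below builds its upper-left 3x3 in plain C# and verifies that choosing N = (0, 0, 1) reproduces the Z-axis rotation matrix listed earlier. The function name is illustrative; N is assumed to be a unit vector and the angle is in radians.

```csharp
using System;

public static class AxisAngleDemo
{
    // Upper-left 3x3 of the arbitrary-axis rotation matrix.
    // (nx, ny, nz) must be unit length; angle is in radians.
    public static float[,] Rotation(float nx, float ny, float nz, float angle)
    {
        float s = MathF.Sin(angle);
        float c = MathF.Cos(angle);
        float ic = 1f - c;
        return new float[,]
        {
            { ic * nx * nx + c,      ic * nx * ny - s * nz, ic * nz * nx + s * ny },
            { ic * nx * ny + s * nz, ic * ny * ny + c,      ic * ny * nz - s * nx },
            { ic * nz * nx - s * ny, ic * ny * nz + s * nx, ic * nz * nz + c      },
        };
    }

    public static void Main()
    {
        // N = Z axis, 90 degrees: expect [cos -sin 0; sin cos 0; 0 0 1].
        float[,] r = Rotation(0, 0, 1, MathF.PI / 2);
        for (int row = 0; row < 3; row++)
            Console.WriteLine($"{r[row, 0]:F3} {r[row, 1]:F3} {r[row, 2]:F3}");
    }
}
```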

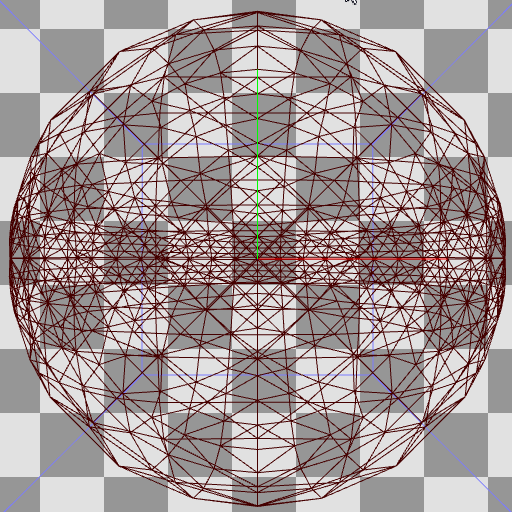

Mesh

// (0,1) +----+ (1,1)

// | |

// (0,0) +----+ (1,0)

//

// 2 +----+ 3

// | |

// 0 +----+ 1

Mesh mesh = new Mesh();

Vector3[] vertices = new Vector3[4] {

new Vector3(0, 0, 0),

new Vector3(1, 0, 0),

new Vector3(0, 1, 0),

new Vector3(1, 1, 0)

};

int[] tris = new int[6] {

// lower left triangle

0, 2, 1,

// upper right triangle

2, 3, 1

};

Vector2[] uv = new Vector2[4] {

new Vector2(0, 0),

new Vector2(1, 0),

new Vector2(0, 1),

new Vector2(1, 1)

};

Vector3[] normals = new Vector3[4] {

-Vector3.forward,

-Vector3.forward,

-Vector3.forward,

-Vector3.forward

};

mesh.vertices = vertices;

mesh.triangles = tris;

mesh.uv = uv;

mesh.normals = normals;

Topology

| MeshTopology |

|---|

| Points |

| Lines |

| LineStrip |

| Triangles |

| Quads |

MeshFilter mf = GetComponent<MeshFilter>();

mf.mesh.SetIndices(mf.mesh.GetIndices(0), MeshTopology.Points, 0);

public void SetIndices(int[] indices, MeshTopology topology, int submesh, bool calculateBounds = true, int baseVertex = 0);

Changing the mesh topology can give a more convincing effect.

Ref

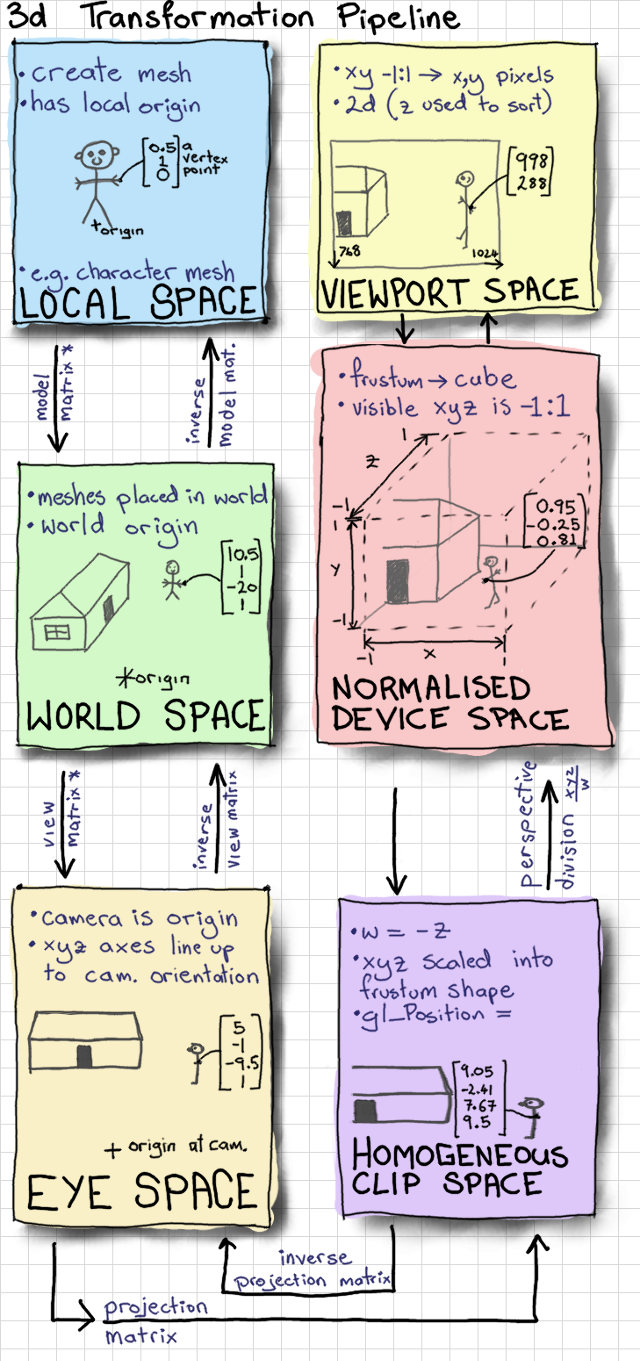

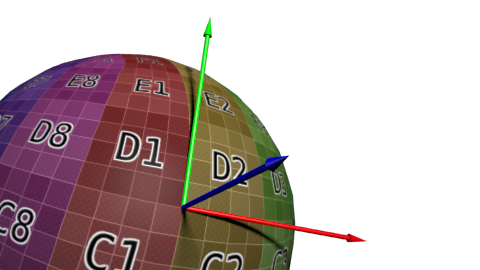

Coordinate

- Coordinate spaces

Positions as defined by Unity

| VertexPositionInputs | Space | AKA | 타입 | 설명 |

|---|---|---|---|---|

| positionOS | Object | Local / Model | float3 | |

| positionWS | World | Global | float3 | |

| positionVS | View | Camera / Eye | float3 | 카메라에서 바라볼때 |

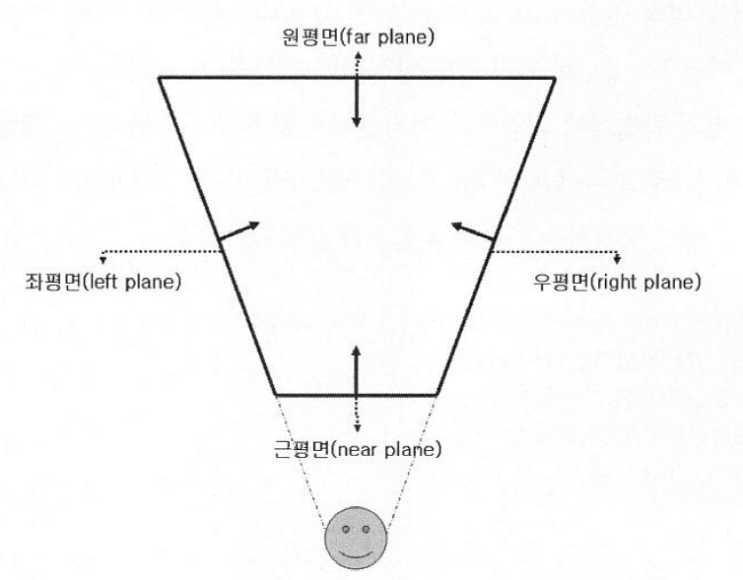

| positionCS | Clip (Homogeneous) | float4 | [-w, w] 카메라 시야에서 안보인 것은 제외, Orthogonal 적용 | |

| positionNDC | Normalized Device Coordinate (Homogeneous) | float4 | [0, w] : (x, y, z, w) |

- Homogeneous coordinates

- Homogeneous coordinates extend n-dimensional Cartesian coordinates to n+1 dimensions.

- Going from float3 to float4 is what earns the "Homogeneous" label.

- Normalized Device Coordinate (Homogeneous) is used for perspective-correct interpolation.

uint2 positionSS; // Screen space pixel coordinates : [0, NumPixels)

uint2 tileCoord; // Screen tile coordinates : [0, NumTiles)

float deviceDepth; // Depth from the depth buffer : [0, 1] (typically reversed)

float linearDepth; // View space Z coordinate : [Near, Far]

OS ----------------------- Object Space

| UNITY_MATRIX_M * OS

WS ----------------------- World Space

| UNITY_MATRIX_V * WS

VS ----------------------- View Space

| UNITY_MATRIX_P * VS

CS ----------------------- Clip Space (Homogeneous)

| NDC = CS * 0.5

| NDC.x = NDC.x + NDC.w

| NDC.y = NDC.y + NDC.w // DirectX

| NDC.y = -NDC.y + NDC.w // OpenGL

| NDC.zw = CS.zw

NDC ---------------------- Normalized Device Coordinate (Homogeneous) [0..w]

| pd = (NDC.xyz / NDC.w); // [0, 1] : perspective divide

| ndc = pd * 2.0 - 1.0; // [-1, 1]

|

|

ndc ---------------------- Normalized Device Coordinate (Nonhomogeneous) [-1..1]

| float2 uv_Screen = positionNDC.xy / positionNDC.w;

| float2 uv_Screen = GetNormalizedScreenSpaceUV(positionCS);

uv_Screen ---------------- [0, 1]

| float2 positionScreen = uv_Screen * _ScreenParams.xy;

positionScreen ----------- [0, screenWidth] / [0, screenHeight]
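The chain from clip space to screen UV above can be followed on the CPU. This is a sketch in plain C#, not Unity's implementation; `flipY` is a stand-in for the platform-dependent sign on y, and the 1920 x 1080 target is an assumed example size.

```csharp
using System;

public static class ScreenUvDemo
{
    // CS -> homogeneous NDC ([0, w]) -> screen UV ([0, 1]).
    // flipY is +1 or -1 depending on the platform convention.
    public static (float u, float v) ClipToScreenUv(float csX, float csY, float csW, float flipY)
    {
        float ndcX = csX * 0.5f + csW * 0.5f;
        float ndcY = csY * 0.5f * flipY + csW * 0.5f;
        return (ndcX / csW, ndcY / csW); // perspective divide
    }

    public static void Main()
    {
        // A clip-space point on the right edge, vertically centered (x = w, y = 0).
        var (u, v) = ClipToScreenUv(csX: 4f, csY: 0f, csW: 4f, flipY: 1f);
        Console.WriteLine($"uv = ({u}, {v})");                    // uv = (1, 0.5)
        Console.WriteLine($"pixel = ({u * 1920f}, {v * 1080f})"); // pixel = (1920, 540)
    }
}
```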

Transform

- com.unity.render-pipelines.core/ShaderLibrary/SpaceTransforms.hlsl

---

title: Positions

---

flowchart LR

ObjectPos --TransformObjectToWorld--> WorldPos

ObjectPos --TransformObjectToHClip--> HClipPos

WorldPos --TransformWorldToObject--> ObjectPos

WorldPos --TransformWorldToView--> ViewPos

WorldPos --TransformWorldToHClip--> HClipPos

ViewPos --TransformViewToWorld--> WorldPos

ViewPos --TransformWViewToHClip--> HClipPos

---

title: Directions

---

flowchart LR

ObjectDir --TransformObjectToTangent--> TangentDir

ObjectDir --TransformObjectToWorldDir--> WorldDir

TangentDir --TransformTangentToObject--> ObjectDir

TangentDir --TransformTangentToWorldDir --> WorldDir

WorldDir --TransformWorldToTangentDir--> TangentDir

WorldDir --TransformWorldToObjectDir--> ObjectDir

WorldDir --TransformWorldToViewDir--> ViewDir

WorldDir --TransformWorldToHClipDir--> HClipDir

ViewDir --TransformViewToWorldDir--> WorldDir

---

title: Surface Normals

---

flowchart LR

ObjectNormal --TransformObjectToWorldNormal--> WorldNormal

WorldNormal --TransformWorldToViewNormal--> ViewNormal

WorldNormal --TransformWorldToTangent--> TangentNormal

WorldNormal --TransformWorldToObjectNormal--> ObjectNormal

ViewNormal --TransformViewToWorldNormal--> WorldNormal

TangentNormal --TransformTangentToWorld --> WorldNormal

UNITY_MATRIX

| Matrix | 설명 |

|---|---|

| UNITY_MATRIX_M | renderer.localToWorldMatrix |

| UNITY_MATRIX_V | camera.worldToCameraMatrix |

| UNITY_MATRIX_P | GL.GetGPUProjectionMatrix(camera.projectionMatrix, false); |

- localToWorldMatrix

- Up to Unity 4, the scale was processed before the matrix was handed to the GPU,

- so renderer.localToWorldMatrix and transform.localToWorldMatrix differed; now they are the same.

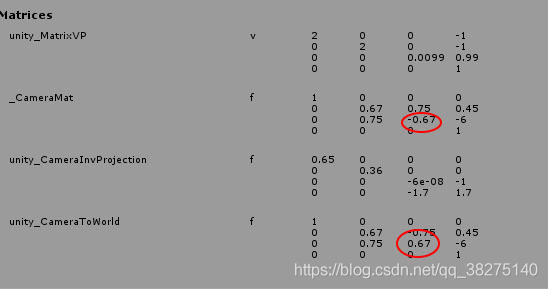

| Camera-related | For rendering (UNITY_MATRIX_*), view forward is -z. The camera matrices use +z as forward, matching the editor |

|---|---|

| UNITY_MATRIX_V | cam.worldToCameraMatrix |

| UNITY_MATRIX_P | GL.GetGPUProjectionMatrix(camera.projectionMatrix, false) |

| UNITY_MATRIX_I_V | cam.cameraToWorldMatrix |

| UNITY_MATRIX_I_P | GL.GetGPUProjectionMatrix(camera.projectionMatrix, false).inverse |

| unity_WorldToCamera | Matrix4x4.TRS(cam.transform.position, cam.transform.rotation, Vector3.one).inverse |

| unity_CameraToWorld | Matrix4x4.TRS(cam.transform.position, cam.transform.rotation, Vector3.one) |

| unity_CameraProjection | cam.projectionMatrix |

| unity_CameraInvProjection | cam.projectionMatrix.inverse |

| _ProjectionParams | x | y | z | w |

|---|---|---|---|---|

| DirectX | 1 | near plane | far plane | 1 / farplane |

| OpenGL | -1 | near plane | far plane | 1 / farplane |

| | UNITY_REVERSED_Z | UNITY_NEAR_CLIP_VALUE | UNITY_RAW_FAR_CLIP_VALUE |

|---|---|---|---|

| DirectX | 1 | 1 | 0 |

| Vulkan | 1 | 1 | 0 |

| OpenGL | 0 | -1 | 1 |

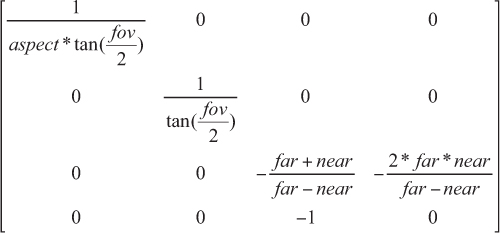

Perspective Camera

// Find our current location in the camera's projection space.

Vector3 pt = Camera.main.projectionMatrix.MultiplyPoint(transform.position);

// Matrix4x4.MultiplyPoint

public Vector3 MultiplyPoint(Matrix4x4 mat, Vector3 v)

{

Vector3 result;

result.x = mat.m00 * v.x + mat.m01 * v.y + mat.m02 * v.z + mat.m03;

result.y = mat.m10 * v.x + mat.m11 * v.y + mat.m12 * v.z + mat.m13;

result.z = mat.m20 * v.x + mat.m21 * v.y + mat.m22 * v.z + mat.m23;

float num = mat.m30 * v.x + mat.m31 * v.y + mat.m32 * v.z + mat.m33;

num = 1 / num;

result.x *= num;

result.y *= num;

result.z *= num;

return result;

}

// If z is not computed

public Vector3 MultiplyPoint(Matrix4x4 mat, Vector3 v)

{

Vector3 result;

result.x = mat.m00 * v.x + mat.m01 * v.y + mat.m02 * v.z + mat.m03;

result.y = mat.m10 * v.x + mat.m11 * v.y + mat.m12 * v.z + mat.m13;

float num = mat.m30 * v.x + mat.m31 * v.y + mat.m32 * v.z + mat.m33;

num = 1 / num;

result.x *= num;

result.y *= num;

return result;

}

// Substituting the entries of a perspective projection matrix

public Vector3 MultiplyPoint(Matrix4x4 mat, Vector3 v)

{

Vector3 result;

result.x = mat.m00 * v.x + 0 * v.y + 0 * v.z + 0;

result.y = 0 * v.x + mat.m11 * v.y + 0 * v.z + 0;

float num = 0 * v.x + 0 * v.y + -1 * v.z + 0;

num = 1 / num;

result.x *= num;

result.y *= num;

return result;

}

// Finally

public Vector3 MultiplyPoint(Matrix4x4 mat, Vector3 v)

{

Vector3 result;

result.x = mat.m00 * v.x;

result.y = mat.m11 * v.y;

float num = -1 * v.z;

num = 1 / num;

result.x *= num;

result.y *= num;

return result;

}

(X, Y, linearEyeDepth)

positionNDC // [-1, 1]

X = positionNDC.x * linearEyeDepth / mat.m00

Y = positionNDC.y * linearEyeDepth / mat.m11
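The two formulas above are just the simplified `MultiplyPoint` run backwards. The sketch below (plain C#, illustrative names) projects a view-space point and then recovers its x and y from NDC and linear eye depth. The camera values (60 degree vertical FOV, 16:9 aspect) are assumptions chosen for the example.

```csharp
using System;

public static class ViewReconstructDemo
{
    // Simplified perspective projection of a view-space point (camera looks down -z):
    // ndc.xy = (m00 * x, m11 * y) / -z
    public static (float x, float y) Project(float m00, float m11, float vx, float vy, float vz)
    {
        float invW = 1f / -vz;
        return (m00 * vx * invW, m11 * vy * invW);
    }

    // Inverse: view-space x, y from NDC ([-1, 1]) and linear eye depth (= -z).
    public static (float x, float y) Unproject(float m00, float m11, float ndcX, float ndcY, float eyeDepth)
    {
        return (ndcX * eyeDepth / m00, ndcY * eyeDepth / m11);
    }

    public static void Main()
    {
        // Assumed camera: 60 degree vertical FOV, 16:9 aspect.
        float m11 = 1f / MathF.Tan(60f * MathF.PI / 180f * 0.5f);
        float m00 = m11 / (16f / 9f);

        var ndc = Project(m00, m11, 2f, -1f, -10f);
        var view = Unproject(m00, m11, ndc.x, ndc.y, 10f);
        Console.WriteLine($"{view.x}, {view.y}"); // approximately 2, -1
    }
}
```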

The zero-based row-column position:

| _m00, _m01, _m02, _m03 |

| _m10, _m11, _m12, _m13 |

| _m20, _m21, _m22, _m23 |

| _m30, _m31, _m32, _m33 |

The one-based row-column position:

| _11, _12, _13, _14 |

| _21, _22, _23, _24 |

| _31, _32, _33, _34 |

| _41, _42, _43, _44 |

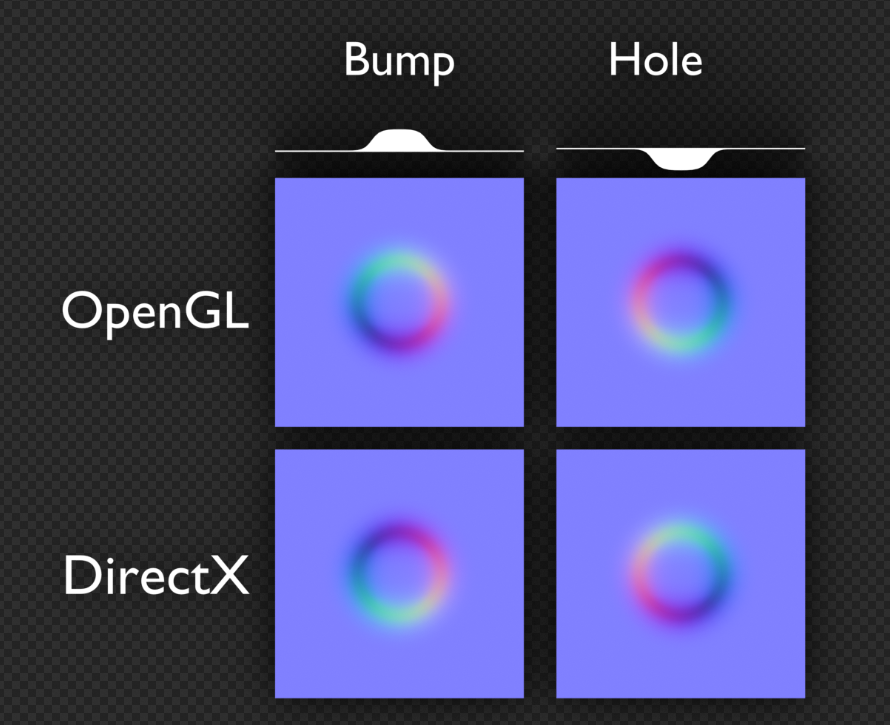

UV

- texel (TExture + piXEL) coordinate

Direct X

(0,0) (1,0)

+-------+-------+

| | |

| | |

+-------+-------+

| | |

| | |

+-------+-------+

(0,1) (1,1)

OpenGL / UnityEngine

(0,1) (1,1)

+-------+-------+

| | |

| | |

+-------+-------+

| | |

| | |

+-------+-------+

(0,0) (1,0)

- Mathematically, treating every 2D coordinate system the OpenGL way avoids confusion about orientation. From a programmer's point of view, however, the DirectX way tends to feel more natural.

Ref

- Computergrafik - Vorlesung 6 - Coordinate Systems

- Unity - Building the shader's World matrix (unity_ObjectToWorld) by hand

- Unity - Building the shader's Camera matrix (UNITY_MATRIX_V) by hand

- Unity - Meaning of the unity_CameraWorldClipPlanes built-in variable

- Unity - Building the shader's perspective projection matrix (UNITY_MATRIX_P) by hand

- Coordinate spaces of the rendering pipeline

- Look At Transformation Matrix in Vertex Shader

- transform.localToWorldMatrix

- Renderer.localToWorldMatrix

- Camera.worldToCameraMatrix

- Camera.projectionMatrix

- GL.GetGPUProjectionMatrix

- http://blog.hvidtfeldts.net/index.php/2014/01/combining-ray-tracing-and-polygons/

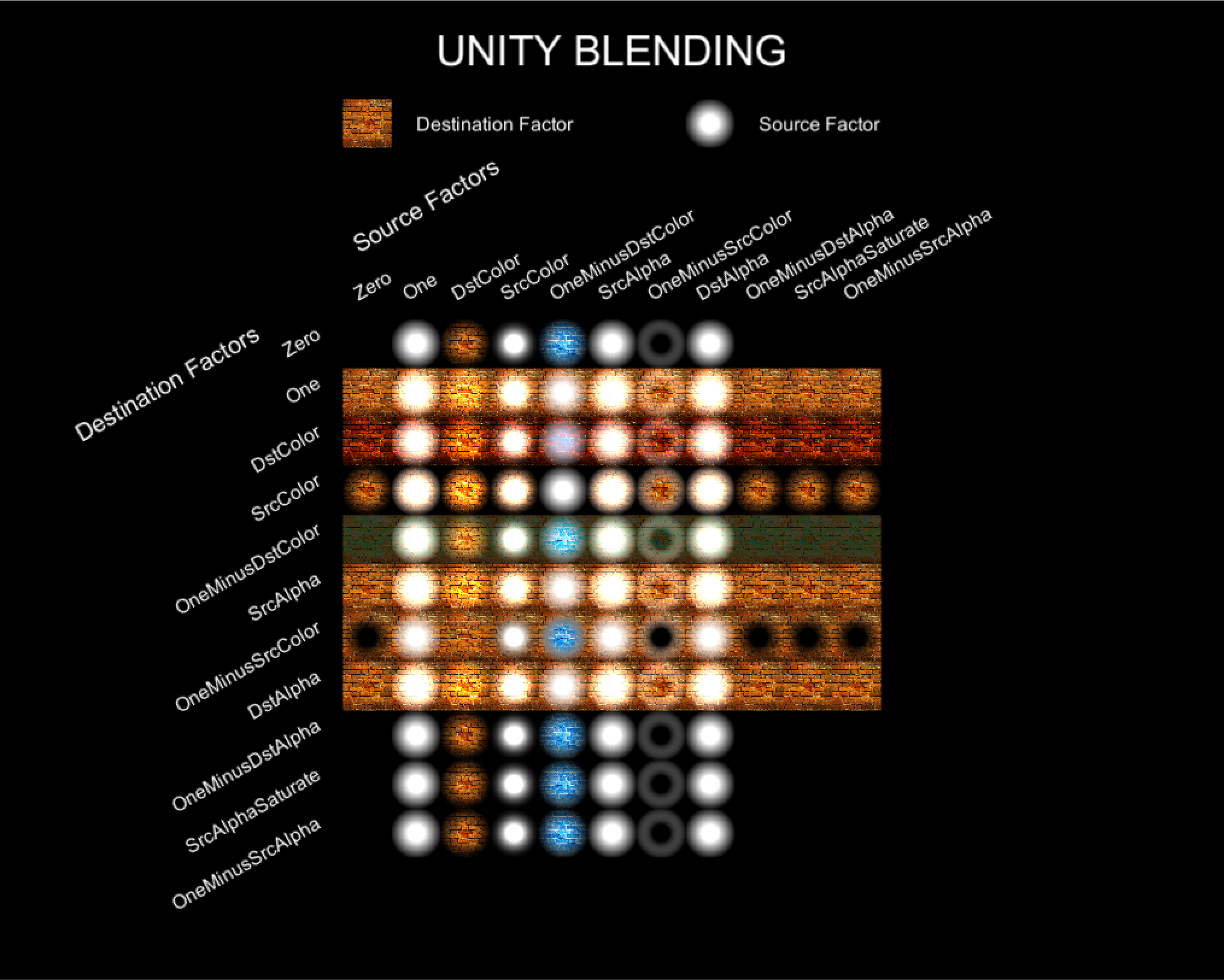

Alpha

- Using alpha costs performance, whether through testing (discard) or blending.

- On older mobile devices, blending tended to perform better.

SubShader

{

Tags // SubShader Tags are different from Pass Tags.

{

"RenderPipeline" = "UniversalPipeline"

// "IgnoreProjector" is not used in URP

// for cutout

"Queue" = "AlphaTest" // render order

"RenderType" = "TransparentCutout" // grouping (used when redrawing everything at once, e.g. a full-scene normal pass)

// for blend

"Queue" = "Transparent"

"RenderType" = "Transparent"

}

Pass

{

Tags

{

"LightMode" = "UniversalForward"

}

// https://docs.unity3d.com/Manual/SL-Blend.html

Blend A B

// http://docs.unity3d.com/Manual/SL-CullAndDepth.html

ZWrite <On | Off> // default: On

ZTest <(Less | Greater | LEqual | GEqual | Equal | NotEqual | Always)> // default: LEqual

}

}

- Blend

  - How colors are mixed.

- ZWrite

  - Whether or not the Z value is written.

- ZTest

  - Reads the Z value already written (ZRead) and decides whether the fragment may be drawn.
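As an illustration of what a `Blend` line means, the sketch below evaluates the common `Blend SrcAlpha OneMinusSrcAlpha` equation on the CPU in plain C#. It only models the color math for a single pixel; the function name is made up for this example.

```csharp
using System;

public static class BlendDemo
{
    // Blend SrcAlpha OneMinusSrcAlpha:
    // result = src.rgb * src.a + dst.rgb * (1 - src.a)
    public static float[] AlphaBlend(float[] srcRgba, float[] dstRgb)
    {
        float a = srcRgba[3];
        var result = new float[3];
        for (int i = 0; i < 3; i++)
            result[i] = srcRgba[i] * a + dstRgb[i] * (1f - a);
        return result;
    }

    public static void Main()
    {
        float[] src = { 1f, 0f, 0f, 0.25f }; // 25% opaque red
        float[] dst = { 0f, 0f, 1f };        // blue already in the render target
        float[] c = AlphaBlend(src, dst);
        Console.WriteLine($"{c[0]}, {c[1]}, {c[2]}"); // 0.25, 0, 0.75
    }
}
```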

Alpha Cutout / Alpha Testing

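- Uses clip (texkill).

- Simple; no need to worry about sorting.

- On older mobile devices it tended to perform worse than alpha blending.

- These days alpha testing may actually be the better choice.

- Mobile GPUs (A11 (iOS), PowerVR, etc.) use TBDR (tile-based deferred rendering), rendering tile by tile to reduce memory use and bandwidth.

- With alpha testing, whether a fragment is actually visible is unknown until the alpha test finishes, which gets in the way of the deferred optimization.

- Grass, trees, hair, fur, etc.

- If clip makes the edges look too jagged, use blending instead.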

- // if ZWrite is Off, clip() is fast enough on mobile, because it won’t write the DepthBuffer, so no GPU pipeline stall(confirmed by ARM staff).

SubShader

{

Tags // SubShader Tags are different from Pass Tags.

{

"RenderPipeline" = "UniversalPipeline"

"Queue" = "AlphaTest"

"RenderType" = "TransparentCutout"

}

Pass

{

Tags

{

"LightMode" = "UniversalForward"

}

HLSLPROGRAM

...

half4 frag(VStoFS IN) : SV_Target

{

half alpha = ...;

clip(alpha - _Cutoff);

return half4(1, 0, 0, 1);

}


ENDHLSL

}

}

// URP has a function that decides whether to clip based on `_ALPHATEST_ON`.

// https://github.com/Unity-Technologies/Graphics/blob/master/com.unity.render-pipelines.universal/ShaderLibrary/ShaderVariablesFunctions.hlsl

void AlphaDiscard(real alpha, real cutoff, real offset = real(0.0))

{

#ifdef _ALPHATEST_ON

clip(alpha - cutoff + offset);

#endif

}

Alpha Blend

- Mostly used for effects.

- When you need more detail than alpha testing gives.

- Frosted glass.

SubShader

{

Tags // SubShader Tags are different from Pass Tags.

{

"RenderPipeline" = "UniversalPipeline"

"Queue" = "Transparent"

"RenderType" = "Transparent"

}

Pass

{

ZWrite Off // overlapping pixels are all drawn

Blend SrcAlpha OneMinusSrcAlpha

Tags

{

"LightMode" = "UniversalForward"

}

}

}

- ZWrite Off

- Rendering the back faces as well becomes a problem.

- Render only the visible faces by using two passes.

SubShader

{

Tags // SubShader Tags are different from Pass Tags.

{

"RenderPipeline" = "UniversalPipeline"

"Queue" = "Transparent"

"RenderType" = "Transparent"

}

Pass

{

Tags

{

"LightMode" = "SRPDefaultUnlit"

}

ZWrite On

ColorMask 0 // do not render color

Cull Front

HLSLPROGRAM

...

ENDHLSL

}

Pass

{

Tags

{

"LightMode" = "UniversalForward"

}

ZWrite Off

Cull Back

Blend SrcAlpha OneMinusSrcAlpha

HLSLPROGRAM

...

ENDHLSL

}

}

Ref

- Jihoo Oh - Effect Shader Lecture 2 - Alpha / Blending

- https://www.gamedev.net/forums/topic/656826-why-the-alphablend-is-a-better-choice-than-alphatest-to-implement-transparent-on-mobile-device/5154785/

- Tile Based Rendering on mobile devices and caveats in Unity #2: caveats when authoring resources for TBR

- https://blog.naver.com/dasoong15/221356325748

- PowerVR Performance Recommendations The Golden Rules

- Z buffer Read / Write concepts, by 김윤정

NormalMap

inline void ExtractTBN(in half3 normalOS, in float4 tangent, inout half3 T, inout half3 B, inout half3 N)

{

N = TransformObjectToWorldNormal(normalOS);

T = TransformObjectToWorldDir(tangent.xyz);

B = cross(N, T) * tangent.w * unity_WorldTransformParams.w;

}

inline half3 CombineTBN(in half3 tangentNormal, in half3 T, in half3 B, in half3 N)

{

return mul(tangentNormal, float3x3(normalize(T), normalize(B), normalize(N)));

}

Varyings vert(Attributes IN)

{

ExtractTBN(IN.normalOS, IN.tangent, OUT.T, OUT.B, OUT.N);

}

half4 frag(Varyings IN) : SV_Target

{

half3 normalTex = UnpackNormal(SAMPLE_TEXTURE2D(_NormalTex, sampler_NormalTex, IN.uv));

half3 N = CombineTBN(normalTex, IN.T, IN.B, IN.N);

}

- Why use a NormalMap?

- What is TBN?

- How do you get the world-space normal?

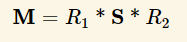

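- Why is the G channel flipped in a (tangent-space) normal map?

Why use a normal map

- Packing in enough vertices to express the detail puts a heavy load on real-time vertex processing (mostly on the CPU).

- If the shader computes lighting from the normals stored in the normal map, as if they were virtual vertices, the detail is preserved.

Object Space vs Tangent Space

- On a rigged model the vertices deform and the normal directions change with them, so a fixed object-space coordinate frame stops being meaningful.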

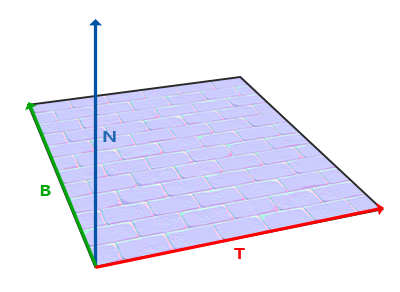

TBN

| TBN | Source | xyz | UV |
|---|---|---|---|
| Tangent | TANGENT | x | u |
| Binormal | cross(T, N) | y | v |
| Normal | NORMAL | z | |

N = mul(mat_I_M, normalOS);

T = mul(tangentOS, mat_M);

B = mul(binormalOS, mat_M);

// When binormalOS is not available (as in Unity), B can be built from N and T.

// B = cross(N, T) * tangentOS.w

======== Compute world-space T / B / N and build the TBN matrix (tangent -> world)

float3x3 TBN_Tangent2World = float3x3(normalize(Input.T), normalize(Input.B), normalize(Input.N));

| Tx Ty Tz |

| Bx By Bz |

| Nx Ny Nz |

mul(tangentNormal, TBN_Tangent2World); // I suspect doing it this way runs into the Normal Scale Problem

======== TBN is an orthogonal matrix, and the inverse of an orthogonal matrix is its transpose.

TBN_World2Tangent = transpose(TBN_Tangent2World);

| Tx Bx Nx |

| Ty By Ny |

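| Tz Bz Nz |

mul(TBN_World2Tangent, tangentNormal); // so this should work?

======== Speculation (unverified)

// The version above is also correct, but...

// TBN is an orthogonal matrix, and the inverse of an orthogonal matrix is its transpose.

// transpose(inverse(M)) == M

mul(tangentNormal, TBN_Tangent2World); // so this should be fine as well? Needs checking.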

normal flatten

// T, B, N

const float3 vec_TBN_UP = float3(0, 0, 1);

normalTS = lerp(normalTS, vec_TBN_UP, _Flatteness);

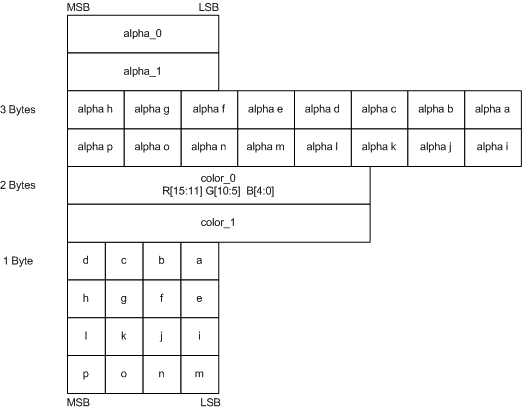

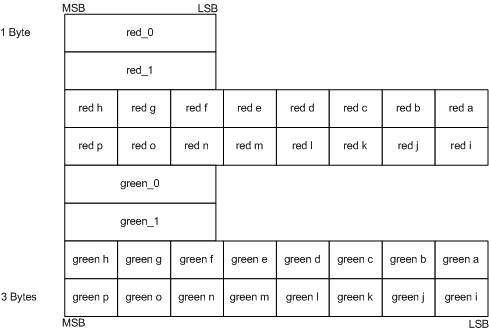

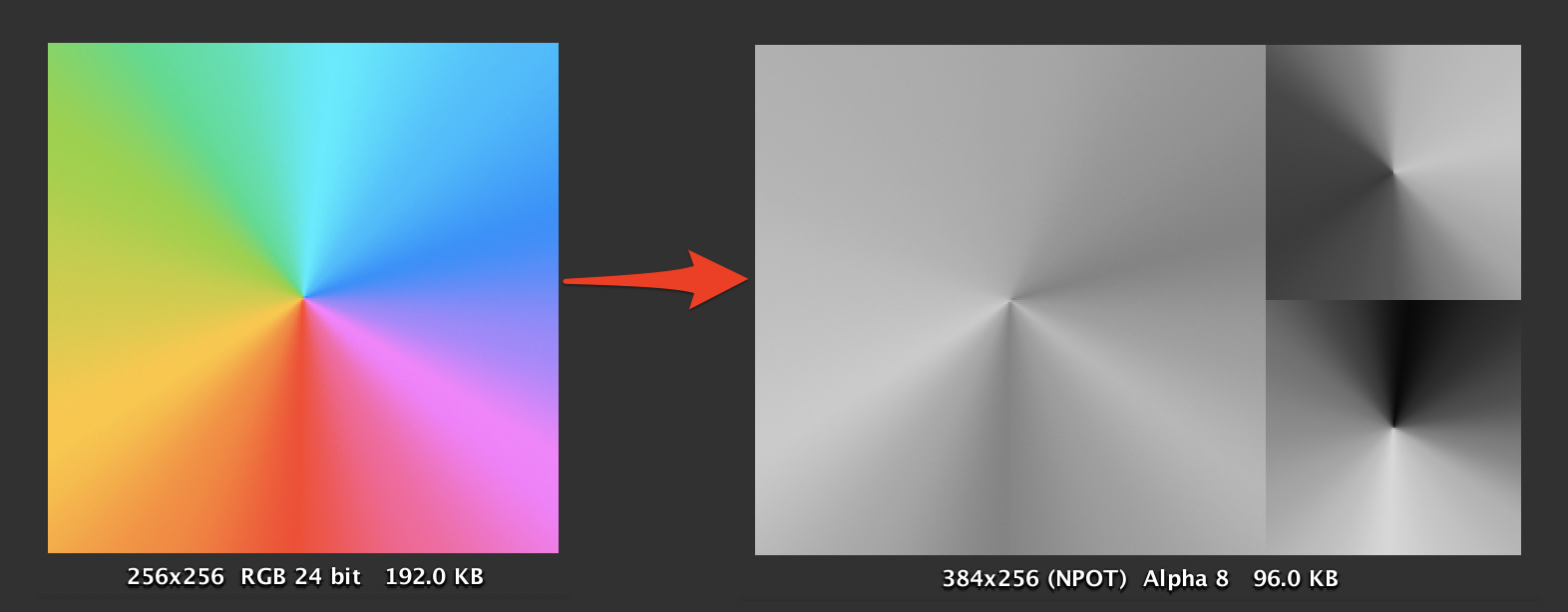

Block Compression

| Format | Description | Channel layout |
|---|---|---|
| DXT5 | BC3 format | (x, y, 0, 1) |
| DXT5nm | DXT5 with the R channel value moved into the A channel | (1, y, 0, x) |

| BC3 | channel | bits |
|---|---|---|
| x | a0, a1 | 16 |
| | alpha indices | 48 |
| y | color0, color1 | 32 |
| | color indices | 32 |

| BC5 | channel | bits |
|---|---|---|
| x | r0, r1 | 16 |
| | red indices | 48 |
| y | g0, g1 | 16 |
| | green indices | 48 |

UNITY_NO_DXT5nm

When DXT5nm is not used (UNITY_NO_DXT5nm), the following formula is used:

real3 UnpackNormalRGBNoScale(real4 packedNormal)

{

return packedNormal.rgb * 2.0 - 1.0;

}

Otherwise UnpackNormalmapRGorAG is used, so that both DXT5 and DXT5nm can be handled.

real3 UnpackNormal(real4 packedNormal)

{

#if defined(UNITY_ASTC_NORMALMAP_ENCODING)

return UnpackNormalAG(packedNormal, 1.0);

#elif defined(UNITY_NO_DXT5nm)

return UnpackNormalRGBNoScale(packedNormal);

#else

// Compiler will optimize the scale away

return UnpackNormalmapRGorAG(packedNormal, 1.0);

#endif

}

// Unpack normal as DXT5nm (1, y, 0, x) or BC5 (x, y, 0, 1)

real3 UnpackNormalmapRGorAG(real4 packedNormal, real scale = 1.0)

{

// Convert to (?, y, 0, x)

packedNormal.a *= packedNormal.r;

return UnpackNormalAG(packedNormal, scale);

}

real3 UnpackNormalAG(real4 packedNormal, real scale = 1.0)

{

real3 normal;

normal.xy = packedNormal.ag * 2.0 - 1.0;

normal.z = max(1.0e-16, sqrt(1.0 - saturate(dot(normal.xy, normal.xy))));

// must scale after reconstruction of normal.z which also

// mirrors UnpackNormalRGB(). This does imply normal is not returned

// as a unit length vector but doesn't need it since it will get normalized after TBN transformation.

// If we ever need to blend contributions with built-in shaders for URP

// then we should consider using UnpackDerivativeNormalAG() instead like

// HDRP does since derivatives do not use renormalization and unlike tangent space

// normals allow you to blend, accumulate and scale contributions correctly.

normal.xy *= scale;

return normal;

}

- xyzw, wy => _g_r => rg => xyn // r sits at the back (in w), so the channels have to be rotated around.

- normal.xy = packednormal.wy * 2 - 1; (0 ~ 1 => -1 ~ 1)

- Z is computed in the shader, using the fact that a unit vector has length 1 (sqrt(x^2 + y^2 + z^2) = 1):

- sqrt(1 - saturate(dot(normal.xy, normal.xy)))
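The z reconstruction can be mirrored on the CPU to see the numbers. This is a sketch in plain C# following the same steps as `UnpackNormalAG` with scale 1; it is not Unity code, and the names are illustrative.

```csharp
using System;

public static class NormalUnpackDemo
{
    // x comes from alpha, y from green; z is rebuilt from x^2 + y^2 + z^2 = 1.
    public static (float x, float y, float z) UnpackAG(float g, float a)
    {
        float x = a * 2f - 1f; // [0, 1] -> [-1, 1]
        float y = g * 2f - 1f;
        float d = Math.Clamp(x * x + y * y, 0f, 1f); // saturate
        float z = MathF.Max(1.0e-16f, MathF.Sqrt(1f - d));
        return (x, y, z);
    }

    public static void Main()
    {
        var flat = UnpackAG(0.5f, 0.5f);   // neutral texel: points straight out
        Console.WriteLine($"{flat.x}, {flat.y}, {flat.z}"); // 0, 0, 1

        var tilted = UnpackAG(0.5f, 0.8f); // leaning toward +x
        float lengthSq = tilted.x * tilted.x + tilted.y * tilted.y + tilted.z * tilted.z;
        Console.WriteLine(lengthSq);       // approximately 1
    }
}
```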

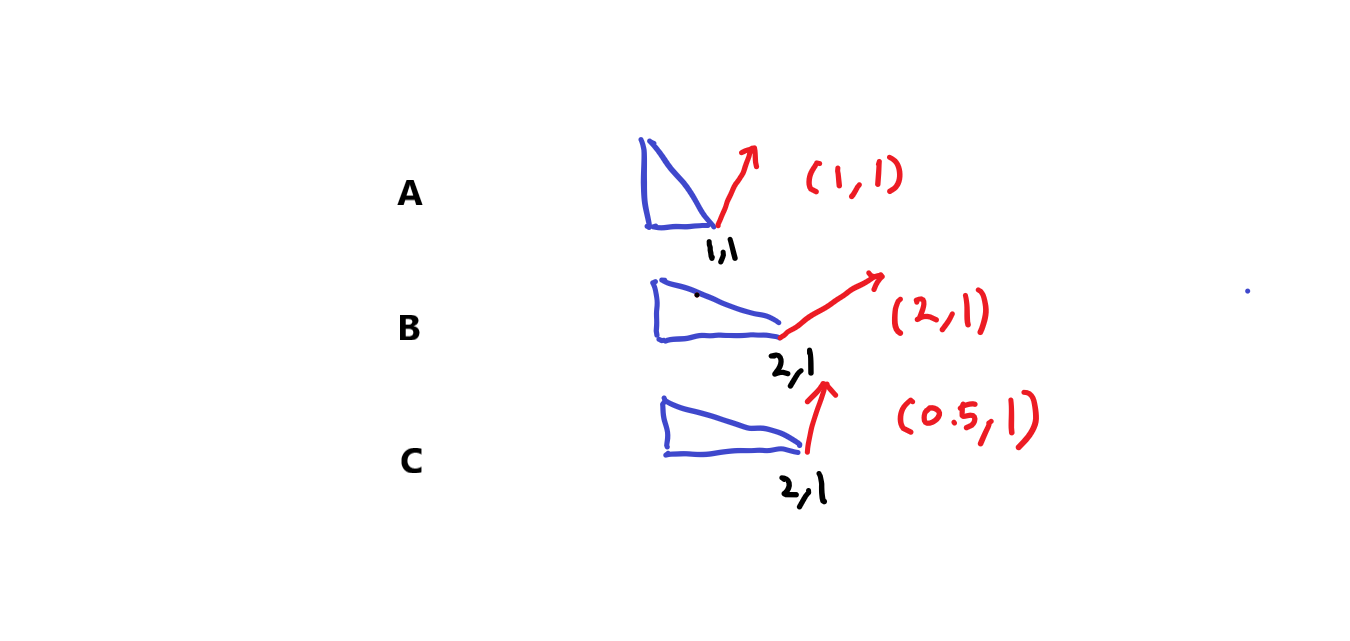

Normal Scale Problem

The problem of how normals change when an object is scaled.

Suppose we want to scale shape A up by 2 along x:

| | Vertex | x scale | Normal |
|---|---|---|---|
| A | (1, 1) | 1 | (1, 1) |
| B | (2, 1) | 2 | (2, 1) |
| C | (2, 1) | 2 | (0.5, 1) |

As C shows, doubling the x scale does not mean the normal's x should be multiplied by 2. It has to be divided by 2, the inverse.

For positions, world-Position = mul(obj-Position, M) holds as expected.

However, applying world-Normal = mul(obj-Normal, M) in the same way causes the problem shown in B above.

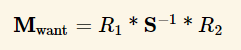

Expressed in terms of the world matrix (M):

If the matrix we want is called M-want, then world-Normal = mul(M-want, obj-Normal),

that is, M-want = transpose(inverse(M)).

Argument order of matrix and vector under DirectX-style row-major: mul(vector, matrix) = mul(transpose(matrix), vector)

The example below is written in row-major terms.

M = ObjectToWorld

inverse(M) = WorldToObject

M-want = transpose(inverse(M))

world-Normal

= mul(obj-Normal , M-want )

= mul(obj-Normal , transpose(inverse(M)))

= mul(inverse(M) , obj-Normal )

= mul(WorldToObject, obj-Normal )
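The table's B and C cases can be verified with a dot product: a correctly transformed normal stays perpendicular to the transformed surface. The sketch below uses a 2D diagonal scale in plain C#, for which transpose(inverse(M)) is simply diag(1/sx, 1/sy); all names are illustrative.

```csharp
using System;

public static class NormalScaleDemo
{
    public static float Dot(float[] a, float[] b) => a[0] * b[0] + a[1] * b[1];

    // Applies a non-uniform 2D scale M = diag(sx, sy).
    public static float[] ApplyM(float[] v, float sx, float sy) =>
        new[] { v[0] * sx, v[1] * sy };

    // Applies transpose(inverse(M)); for a diagonal M this is diag(1/sx, 1/sy).
    public static float[] ApplyInverseTranspose(float[] v, float sx, float sy) =>
        new[] { v[0] / sx, v[1] / sy };

    public static void Main()
    {
        float[] tangent = { 1f, -1f }; // lies along the surface
        float[] normal = { 1f, 1f };   // perpendicular to the surface

        float[] tangentWS = ApplyM(tangent, 2f, 1f);

        // Case B: normal transformed by M -> (2, 1), no longer perpendicular.
        Console.WriteLine(Dot(tangentWS, ApplyM(normal, 2f, 1f)));                // 3
        // Case C: normal transformed by transpose(inverse(M)) -> (0.5, 1).
        Console.WriteLine(Dot(tangentWS, ApplyInverseTranspose(normal, 2f, 1f))); // 0
    }
}
```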

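Why the G channel of a (tangent-space) normal map gets flipped

- https://victorkarp.wordpress.com/2020/06/26/inverting-a-normal-map-in-blender/

- A unit vector has length 1, but vectors such as (-1, 0, 0) exist, so the components of a normalized normal range over -1...1.

- To store it in a texture, squeeze the range to 0...1: multiply by 0.5, then add 0.5.

- In the shader, expand the stored 0...1 back to -1...1: multiply by 2, then subtract 1.

In a normal map the z value is often dominant, which makes the image look blue and can make raised areas read as dents.

- No normal points into the surface (z is never negative).

- So z ranges over 0 ~ 1.

- Once stored, that range becomes 0.5 ~ 1, so the values start at 0.5.

- Hence the map looks blue, and raised parts can look recessed in the image.

So flipping the G channel makes it easier for artists to recognize the raised parts.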

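OpenGL Y+ / DirectX Y-

- Unity uses OpenGL (Y+), which is comparatively easy to read.

- In an engine that follows the DirectX convention, let artists author Y+ textures and multiply y by -1 in the shader to flip it; that makes their work easier.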

MikkTSpace

- Mikkelsen tangent space normal

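| URP | Shader Graph | URP Lit |
|---|---|---|
| 8.1.0 | per-pixel MikkTSpace normal map | per-vertex |
| 8.2.0 | per-pixel | per-pixel |

- Normal map baking

  - Method 1: convert a height map or an ordinary image into a normal map.

  - Method 2: bake from a low-poly mesh and a high-poly mesh.

    - 3D packages do not all compute averaged vertex normals the same way.

    - MikkTSpace standardizes the computation so that they match.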

Ref

- YouTube: 안콜3D - (public) Everything about normal maps

- xNormal (program)

- https://bgolus.medium.com/generating-perfect-normal-maps-for-unity-f929e673fc57

Cubemap

- Creating a Cubemap asset that textures can be assigned to:

Create > Legacy > Cubemap

TEXTURECUBE(_CubeMap); SAMPLER(sampler_CubeMap);

half3 reflectVN = reflect(-V, N);

half4 cubeReflect = SAMPLE_TEXTURECUBE_LOD(_CubeMap, sampler_CubeMap, reflectVN, 0);

half3 refractVN = refract(-V, N, 1 / _RefractiveIndex);

half4 cubeRefract = SAMPLE_TEXTURECUBE_LOD(_CubeMap, sampler_CubeMap, refractVN, 0);

CubemapGen

- https://gpuopen.com/archived/cubemapgen/

- https://seblagarde.wordpress.com/2012/06/10/amd-cubemapgen-for-physically-based-rendering/

- https://github.com/gscept/CubeMapGen

// | AMD CubeMapGen | Unity |

// | -------------- | ----- |

// | X+ | -X |

// | X- | +X |

// | Y+ | +Y |

// | Y- | -Y |

// | Z+ | +Z |

// | Z- | -Z |

using System.IO;

using UnityEditor;

using UnityEngine;

public class BakeStaticCubemap : ScriptableWizard

{

static string imageDirectory = "Assets/CubemapImages";

static string[] cubemapImage = new string[6] {

"top+Y", "bottom-Y",

"left-X", "right+X",

"front+Z","back-Z",

};

static Vector3[] eulerAngles = new Vector3[6] {

new Vector3(-90.0f, 0.0f, 0.0f), new Vector3(90.0f, 0.0f, 0.0f),

new Vector3(0.0f, 90.0f, 0.0f), new Vector3(0.0f, -90.0f, 0.0f),

new Vector3(0.0f, 0.0f, 0.0f), new Vector3(0.0f, 180.0f, 0.0f),

};

public Transform renderPosition;

public Cubemap cubemap;

// Camera settings.

public int cameraDepth = 24;

public LayerMask cameraLayerMask = -1;

public Color cameraBackgroundColor;

public float cameraNearPlane = 0.1f;

public float cameraFarPlane = 2500.0f;

public bool cameraUseOcclusion = true;

// Cubemap settings.

public FilterMode cubemapFilterMode = FilterMode.Trilinear;

// Quality settings.

public int antiAliasing = 4;

public bool IsCreateIndividualImages = false;

[MenuItem("GameObject/Bake Cubemap")]

static void RenderCubemap()

{

DisplayWizard("Bake CubeMap", typeof(BakeStaticCubemap), "Bake!");

}

void OnWizardUpdate()

{

helpString = "Set the position to render from and the cubemap to bake.";

if (renderPosition != null && cubemap != null)

{

isValid = true;

}

else

{

isValid = false;

}

}

void OnWizardCreate()

{

QualitySettings.antiAliasing = antiAliasing;

cubemap.filterMode = cubemapFilterMode;

GameObject go = new GameObject("CubemapCam", typeof(Camera));

go.transform.position = renderPosition.position;

go.transform.rotation = Quaternion.identity;

Camera camera = go.GetComponent<Camera>();

camera.depth = cameraDepth;

camera.backgroundColor = cameraBackgroundColor;

camera.cullingMask = cameraLayerMask;

camera.nearClipPlane = cameraNearPlane;

camera.farClipPlane = cameraFarPlane;

camera.useOcclusionCulling = cameraUseOcclusion;

camera.RenderToCubemap(cubemap);

if (IsCreateIndividualImages)

{

if (!Directory.Exists(imageDirectory))

{

Directory.CreateDirectory(imageDirectory);

}

RenderIndividualCubemapImages(camera);

}

DestroyImmediate(go);

}

void RenderIndividualCubemapImages(Camera camera)

{

camera.backgroundColor = Color.black;

camera.clearFlags = CameraClearFlags.Skybox;

camera.fieldOfView = 90;

camera.aspect = 1.0f;

camera.transform.rotation = Quaternion.identity;

for (int camOrientation = 0; camOrientation < eulerAngles.Length; camOrientation++)

{

string imageName = Path.Combine(imageDirectory, cubemap.name + "_" + cubemapImage[camOrientation] + ".png");

camera.transform.eulerAngles = eulerAngles[camOrientation];

RenderTexture renderTex = new RenderTexture(cubemap.height, cubemap.height, cameraDepth);

camera.targetTexture = renderTex;

camera.Render();

RenderTexture.active = renderTex;

Texture2D img = new Texture2D(cubemap.height, cubemap.height, TextureFormat.RGB24, false);

img.ReadPixels(new Rect(0, 0, cubemap.height, cubemap.height), 0, 0);

RenderTexture.active = null;

DestroyImmediate(renderTex);

byte[] imgBytes = img.EncodeToPNG();

File.WriteAllBytes(imageName, imgBytes);

AssetDatabase.ImportAsset(imageName, ImportAssetOptions.ForceUpdate);

}

AssetDatabase.Refresh();

}

}

Etc

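- A good cubemap

  - https://youtu.be/mnuKwAV-MBA?si=g-NaYv2cyln2jQes&t=239

  - Contains enough bright and dark areas, mid-tones, and reflected light.

  - Bright enough for GI to show.

  - There should be a lit object on the side opposite the sun.

- Lighting setup

  - https://youtu.be/mnuKwAV-MBA?si=HZsut7AbGQ0C-OPE&t=576

  - Balanced albedo brightness is essential.

  - Prepare a palette.

  - Color temperature is easy to adjust.

    - However, at sunset or whenever the light is too saturated,

    - use the post-process color temperature instead of the directional light's color temperature.

- Atmosphere

  - https://youtu.be/mnuKwAV-MBA?si=JGrQpz3kCFf7M3i1&t=747

  - atmospheric fog - sky, atmosphere

  - exponential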

Ref

- https://developer.arm.com/documentation/102179/0100/Implement-reflections-with-a-local-cubemap

- NDC2011 - Overview of PRT (Precomputed Radiance Transfer) and SH (Spherical Harmonics)

Stencil

- vert > Depth Test > Stencil Test > Render

- frag > AlphaTest > Blending

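| Command | What it does | Notes |
|---|---|---|
| ZTest | Compares against the depth buffer, then writes color | The default is LEqual, so fragments closer to the camera are drawn over what was there before |
| ZWrite | Attempts to write to the depth buffer | Depth can only be written if ZTest passes |

| | ZWrite On | ZWrite Off |
|---|---|---|
| ZTest passes | depth O / color O | depth X / color O |
| ZTest fails | depth X / color X | depth X / color X |

| ZTest example | Meaning |
|---|---|
| ZTest LEqual | The object is in front |
| ZTest Greater | The object is occluded |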

Template

// Defaults

Pass

{

Stencil

{

Ref 0 // [0 ... 255]

ReadMask 255 // [0 ... 255]

WriteMask 255 // [0 ... 255]

Comp Always

Pass Keep

Fail Keep

ZFail Keep

}

ZWrite On // On | Off

ZTest LEqual // Less | Greater | LEqual | GEqual | Equal | NotEqual | Always

}

Properties

{

[IntRange]

_StencilRef("Stencil ID [0-255]", Range(0, 255)) = 0

[IntRange]

_StencilReadMask("ReadMask [0-255]", Range(0, 255)) = 255

[IntRange]

_StencilWriteMask("WriteMask [0-255]", Range(0, 255)) = 255

[Enum(UnityEngine.Rendering.CompareFunction)]

_StencilComp("Stencil Comparison", Float) = 8 // Always

[Enum(UnityEngine.Rendering.StencilOp)]

_StencilPass("Stencil Pass", Float) = 0 // Keep

[Enum(UnityEngine.Rendering.StencilOp)]

_StencilFail("Stencil Fail", Float) = 0 // Keep

[Enum(UnityEngine.Rendering.StencilOp)]

_StencilZFail("Stencil ZFail", Float) = 0 // Keep

}

Pass

{

Stencil

{

Ref [_StencilRef]

ReadMask [_StencilReadMask]

WriteMask [_StencilWriteMask]

Comp [_StencilComp]

Pass [_StencilPass]

Fail [_StencilFail]

ZFail [_StencilZFail]

}

}

table

| Item | Default | Notes |
|---|---|---|
| Ref | - | Reference value: compared against the buffer and, with Replace, written to it |
| ReadMask | 255 | |
| WriteMask | 255 | |
| Comp | Always | |
| Pass | Keep | When the stencil test passes |
| Fail | Keep | When the stencil test fails |
| ZFail | Keep | When the stencil test passes && ZTest fails |

| Comp | Condition | Enum value |
|---|---|---|
| Never | false | 1 |
| Less | buffer > ref | 2 |
| Equal | buffer == ref | 3 |
| LEqual | buffer >= ref | 4 |
| Greater | buffer < ref | 5 |
| NotEqual | buffer != ref | 6 |
| GEqual | buffer <= ref | 7 |
| Always | true | 8 |

| Stencil op | Result | Enum value |
|---|---|---|
| Keep | No change | 0 |
| Zero | 0 | 1 |
| Replace | Reference value | 2 |
| IncrSat | Increment, clamped at 255 | 3 |
| DecrSat | Decrement, clamped at 0 | 4 |
| Invert | Bitwise invert | 5 |
| IncrWrap | Increment; 255 wraps to 0 | 6 |
| DecrWrap | Decrement; 0 wraps to 255 | 7 |

Ex

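Masking

- ZTest fails: depth X / color X

// Mask.

// Deliberately never pass the comparison (Comp Never) so the test fails, and on Fail overwrite the buffer with the Ref value (Replace).

// The mask has to render before the object, so check the render queue.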

Stencil

{

Ref 1

Comp Never

Fail Replace

}

// Object.

// Compare (Equal) against the region that the mask set to 1.

// The mask has to render before the object, so check the render queue.

Stencil

{

Ref 1

Comp Equal

}
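The two `Stencil` blocks above can be walked through on a tiny one-row "screen". This is a toy CPU model in plain C#, not how the GPU is implemented; the pixel ranges are arbitrary values chosen for the example.

```csharp
using System;

public static class StencilMaskDemo
{
    public static string Render()
    {
        // One stencil byte per pixel of a 1 x 6 screen, cleared to 0.
        var stencil = new byte[6];
        var color = new[] { '.', '.', '.', '.', '.', '.' };

        // Mask pass over pixels 2..4: Ref 1, Comp Never, Fail Replace.
        // The test always fails, so no color is written, but Fail stores Ref.
        for (int x = 2; x <= 4; x++)
            stencil[x] = 1;

        // Object pass over pixels 0..5: Ref 1, Comp Equal.
        // Only pixels whose stencil value equals Ref receive color.
        for (int x = 0; x < 6; x++)
            if (stencil[x] == 1)
                color[x] = '#';

        return new string(color);
    }

    public static void Main()
    {
        Console.WriteLine(Render()); // ..###.
    }
}
```

If the object pass ran first, every stencil value would still be 0 and nothing would be drawn, which is why the render queue order matters.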

Silhouette

- Drawing an occluded object

  - Normal pass

    - Write to the stencil buffer

  - Occluded pass

    - Is it occluded: ZTest Greater

    - Compare against the stencil buffer

Pass

{

Tags

{

"LightMode" = "SRPDefaultUnlit"

}

ZTest Greater

ZWrite Off

Stencil

{

Ref 2

Comp NotEqual

}

}

Pass

{

Tags

{

"LightMode" = "UniversalForward"

}

Stencil

{

Ref 2

Pass Replace

}

}

Ref

- https://docs.unity3d.com/Manual/SL-CullAndDepth.html

- https://docs.unity3d.com/Manual/SL-Stencil.html

- https://www.ronja-tutorials.com/post/022-stencil-buffers/

- https://rito15.github.io/posts/unity-transparent-stencil/#스텐실

- Making overlapping faces transparent with multiple Render Objects in Unity URP

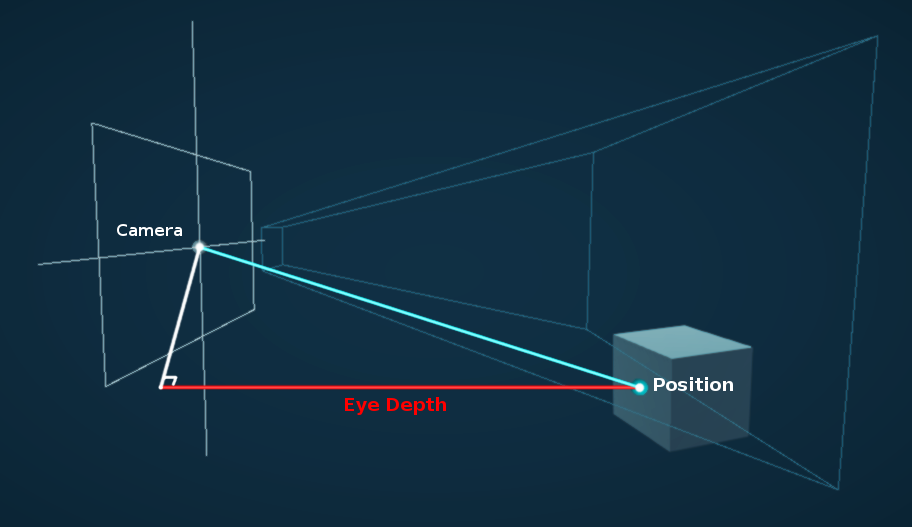

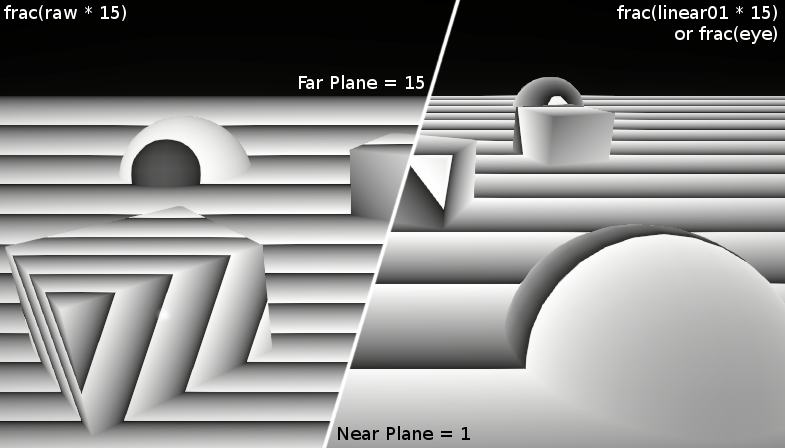

Depth

- LinearEyeDepth : distance from the eye in world units

- Linear01Depth : distance from the eye in [0;1]

#include "Packages/com.unity.render-pipelines.universal/ShaderLibrary/DeclareDepthTexture.hlsl"

half3 pd = IN.positionNDC.xyz / IN.positionNDC.w; // perspectiveDivide

half2 uv_Screen = pd.xy;

half sceneRawDepth = SampleSceneDepth(uv_Screen);

half sceneEyeDepth = LinearEyeDepth(sceneRawDepth, _ZBufferParams);

half scene01Depth = Linear01Depth (sceneRawDepth, _ZBufferParams);

// mirror: com.unity.render-pipelines.universal/ShaderLibrary/DeclareDepthTexture.hlsl

float SampleSceneDepth(float2 uv)

{

return SAMPLE_TEXTURE2D_X(_CameraDepthTexture, sampler_CameraDepthTexture, UnityStereoTransformScreenSpaceTex(uv)).r;

}

// mirror: com.unity.render-pipelines.core/ShaderLibrary/Common.hlsl

// Z buffer to linear 0..1 depth (0 at camera position, 1 at far plane).

// Does NOT work with orthographic projections.

// Does NOT correctly handle oblique view frustums.

// zBufferParam = { (f-n)/n, 1, (f-n)/n*f, 1/f }

float Linear01Depth(float depth, float4 zBufferParam)

{

return 1.0 / (zBufferParam.x * depth + zBufferParam.y);

}

// Z buffer to linear depth.

// Does NOT correctly handle oblique view frustums.

// Does NOT work with orthographic projection.

// zBufferParam = { (f-n)/n, 1, (f-n)/n*f, 1/f }

float LinearEyeDepth(float depth, float4 zBufferParam)

{

return 1.0 / (zBufferParam.z * depth + zBufferParam.w);

}

| _ZBufferParams | x | y | z | w |

|---|---|---|---|---|

| DirectX | -1 + far/near | 1 | x/far | 1/far |

| OpenGL | 1 - far/near | far/near | x/far | y/far |

depth buffer value non-linear (in view space)
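To make the `_ZBufferParams` table concrete, the sketch below evaluates both linearization functions on the CPU for the reversed-Z ("DirectX") row. It is plain C# with the parameter packing copied from the table; the near and far values are assumptions for the example.

```csharp
using System;

public static class DepthDemo
{
    // _ZBufferParams for a reversed-Z platform (the "DirectX" row above).
    public static float[] ZBufferParamsReversed(float near, float far)
    {
        float x = -1f + far / near;
        return new[] { x, 1f, x / far, 1f / far };
    }

    public static float Linear01Depth(float raw, float[] p) => 1f / (p[0] * raw + p[1]);

    public static float LinearEyeDepth(float raw, float[] p) => 1f / (p[2] * raw + p[3]);

    public static void Main()
    {
        float near = 0.5f, far = 100f;
        float[] p = ZBufferParamsReversed(near, far);

        // With reversed Z, raw depth is 1 at the near plane and 0 at the far plane.
        Console.WriteLine(LinearEyeDepth(1f, p)); // approximately 0.5 (near)
        Console.WriteLine(LinearEyeDepth(0f, p)); // approximately 100 (far)
        Console.WriteLine(Linear01Depth(1f, p));  // approximately 0.005 (near / far)
        Console.WriteLine(Linear01Depth(0f, p));  // 1
    }
}
```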

Sample

// vert

float currEyeDepth = -positionVS.z;

float curr01Depth = -positionVS.z * _ProjectionParams.w;

float4 positionNDC = GetVertexPositionInputs(positionOS).positionNDC;

// frag

half2 uv_Screen = IN.positionNDC.xy / IN.positionNDC.w;

half sceneRawDepth = SampleSceneDepth(uv_Screen);

// --------------------------------------------

half scene01Depth = Linear01Depth(sceneRawDepth, _ZBufferParams); // [near/far, 1]

// -----------------------------------------------

// Stretching scene01Depth by _ProjectionParams.z (far plane) gives sceneEyeDepth.

half sceneEyeDepth = scene01Depth * _ProjectionParams.z; // [near, far]

// Equivalent: half sceneEyeDepth = LinearEyeDepth(sceneRawDepth, _ZBufferParams); // [near, far]

// -----------------------------------------------

// Subtracting this fragment's own eye depth tells how far in front of the scene it is.

half diffEyeDepth = sceneEyeDepth - IN.currEyeDepth;

half intersectGradient = 1 - min(diffEyeDepth, 1.0f);

Reversed-z

TODO

ReconstructPositionWS

TODO: one of the two approaches below has a problem

// https://www.cyanilux.com/tutorials/depth

OUT.toViewVectorWS = _WorldSpaceCameraPos - vertexInputs.positionWS;

float2 screenUV = (IN.positionNDC.xy / IN.positionNDC.w);

float sceneRawDepth = SampleSceneDepth(screenUV);

float sceneEyeDepth = LinearEyeDepth(sceneRawDepth, _ZBufferParams);

float fragmentEyeDepth = -IN.positionVS.z;

float3 scenePositionWS = _WorldSpaceCameraPos + (-IN.toViewVectorWS / fragmentEyeDepth) * sceneEyeDepth;

// https://docs.unity3d.com/Packages/com.unity.render-pipelines.universal@12.0/manual/writing-shaders-urp-reconstruct-world-position.html

float2 screenUV = IN.positionCS.xy / _ScaledScreenParams.xy;

// Sample the depth from the Camera depth texture.

#if UNITY_REVERSED_Z

real sceneRawDepth = SampleSceneDepth(screenUV);

#else

// Adjust Z to match NDC for OpenGL ([-1, 1])

real sceneRawDepth = lerp(UNITY_NEAR_CLIP_VALUE, 1, SampleSceneDepth(screenUV));

#endif

// Reconstruct the world space positions.

float3 scenePositionWS = ComputeWorldSpacePosition(screenUV, sceneRawDepth, UNITY_MATRIX_I_VP);

// https://github.com/Unity-Technologies/Graphics/blob/master/Packages/com.unity.render-pipelines.core/ShaderLibrary/Common.hlsl

float3 ComputeWorldSpacePosition(float2 positionNDC, float deviceDepth, float4x4 invViewProjMatrix)

{

float4 positionCS = ComputeClipSpacePosition(positionNDC, deviceDepth);

float4 hpositionWS = mul(invViewProjMatrix, positionCS);

return hpositionWS.xyz / hpositionWS.w;

}

float4 ComputeClipSpacePosition(float2 positionNDC, float deviceDepth)

{

float4 positionCS = float4(positionNDC * 2.0 - 1.0, deviceDepth, 1.0);

#if UNITY_UV_STARTS_AT_TOP

// Our world space, view space, screen space and NDC space are Y-up.

// Our clip space is flipped upside-down due to poor legacy Unity design.

// The flip is baked into the projection matrix, so we only have to flip

// manually when going from CS to NDC and back.

positionCS.y = -positionCS.y;

#endif

return positionCS;

}

ReconstructNormalVS

- https://wickedengine.net/2019/09/22/improved-normal-reconstruction-from-depth/

- https://gist.github.com/bgolus/a07ed65602c009d5e2f753826e8078a0

// 3 tap

const float2 offset_u = float2(0, _CameraDepthTexture_TexelSize.y); // up

const float2 offset_r = float2(_CameraDepthTexture_TexelSize.x, 0); // right

float depth_c = LinearEyeDepth(SampleSceneDepth(IN.uv ), _ZBufferParams); // center

float depth_u = LinearEyeDepth(SampleSceneDepth(IN.uv + offset_u), _ZBufferParams); // up

float depth_r = LinearEyeDepth(SampleSceneDepth(IN.uv + offset_r), _ZBufferParams); // right

float3 diff_v = float3(offset_u, depth_u - depth_c); // vertical (center -> up)

float3 diff_h = float3(offset_r, depth_r - depth_c); // horizontal (center -> right)

float3 normalVS = normalize(cross(diff_v, diff_h));

Ref

- https://www.cyanilux.com/tutorials/depth/

- https://beta.unity3d.com/talks/Siggraph2011_SpecialEffectsWithDepth_WithNotes.pdf

- https://www.reedbeta.com/blog/depth-precision-visualized/

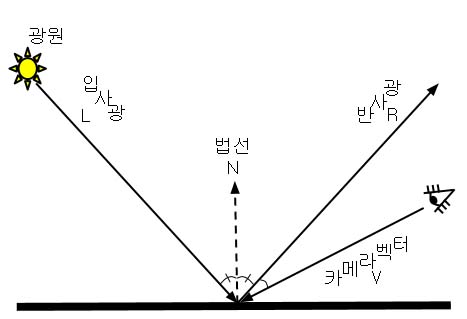

Lighting Model

- https://www.jordanstevenstechart.com/lighting-models

- Check the years to follow how the models evolved and why each one is or is not used.

Lighting Model - NPR

Non-physically based

Lambert

- Johann Heinrich Lambert

- 1760 - Photometria

half NdotL = max(0.0, dot(N, L));

half diffuse = NdotL;

Minnaert

- 1954 - Marcel Minnaert

- Devised to model reflection from the lunar surface; also called the "moon shader".

half NdotL = max(0.0, dot(N, L));

half NdotV = max(0.0, dot(N, V));

half diffuse = NdotL * pow(NdotL * NdotV, _MinnaertDarkness);

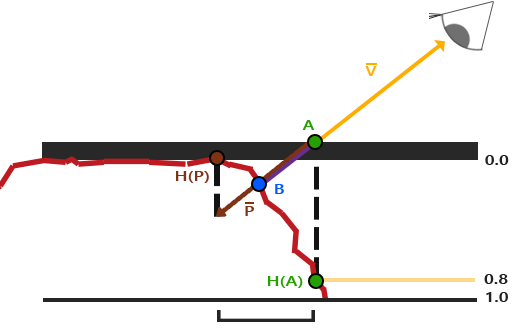

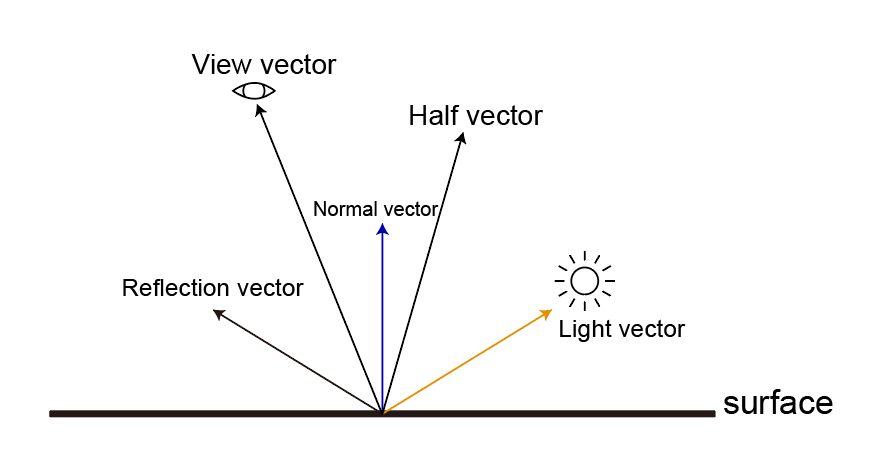

Phong

- 1973 - Bui Tuong Phong

half3 R = reflect(-L, N);

half RdotV = max(0.0f, dot(R, V));

half specular = pow(RdotV, _SpecularPower) * _SpecularNormFactor;

Blinn-Phong

- 1977 - Jim Blinn

half3 H = normalize(V + L);

half NdotH = max(0.0, dot(N, H));

half specular = pow(NdotH, _SpecularPower) * _SpecularNormFactor;
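
To compare the Phong and Blinn-Phong terms above on concrete numbers, here is a small Python sketch. The vectors and the exponent are arbitrary choices for illustration; `reflect` follows the HLSL convention `i - 2 * dot(n, i) * n`.

```python
import math

def dot(a, b):
    return sum(x * y for x, y in zip(a, b))

def normalize(v):
    length = math.sqrt(dot(v, v))
    return [x / length for x in v]

def reflect(i, n):
    # Same convention as HLSL reflect(i, n).
    d = dot(n, i)
    return [x - 2.0 * d * y for x, y in zip(i, n)]

N = [0.0, 0.0, 1.0]
L = normalize([1.0, 0.0, 1.0])   # light 45 degrees off the normal
V = [0.0, 0.0, 1.0]              # viewer looking straight down the normal

R = reflect([-x for x in L], N)
r_dot_v = max(0.0, dot(R, V))                  # Phong term
H = normalize([v + l for v, l in zip(V, L)])
n_dot_h = max(0.0, dot(N, H))                  # Blinn-Phong term

power = 32.0  # hypothetical _SpecularPower
phong = r_dot_v ** power
blinn_phong = n_dot_h ** power
print(phong, blinn_phong)
```

With all vectors in one plane, the angle between N and H is half the angle between R and V, so the same exponent yields a wider highlight with Blinn-Phong.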

Strauss

- 1990 - Paul Strauss

- https://blog.naver.com/sorkelf/401550597481

Gooch

- 1998 - Gooch

- SIGGRAPH1998 - A Non-Photorealistic Lighting Model For Automatic Technical Illustration

- GDC2008 - Stylization with a Purpose: The Illustrative World of TEAM FORTRESS 2

- Splits the shading into warm and cool regions

Half Lambert & Wrapped Lambert

- 2004 Half-Life2 - Valve

- SIGGRAPH2006 - Shading In Valve’s Source Engine

// half lambert

half NdotL = dot(N, L); // left unclamped: Half Lambert remaps [-1, 1] to [0, 1]

half diffuse = pow(NdotL * 0.5 + 0.5, 2);

// wrapped lambert (two alternative forms)

half diffuse = pow(NdotL * wrapValue + (1.0 - wrapValue), 2);

half diffuse = max(0.0, (NdotL + _wrapped) / (1.0 + _wrapped));

// ref: https://blog.naver.com/eryners/220144182154

// With Half Lambert the contrast between light and dark becomes too weak, so the shading lacks a sense of weight.

half diffuse = pow((dot(N, L) * 0.5) + 0.5, 4); // Half Lambert + Pow

half diffuse = pow(max(0.0, (dot(L, N) + wrap) / (1.0 + wrap + wrap * wrap)), 1.0 + wrap);
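
The difference between the diffuse variants is easiest to see on a few sample values of N·L. The Python sketch below assumes the unclamped Half Lambert remap and the common `(NdotL + wrap) / (1 + wrap)` wrap form; the function names are illustrative.

```python
def lambert(n_dot_l):
    return max(0.0, n_dot_l)

def half_lambert(n_dot_l):
    # Remap [-1, 1] to [0, 1], then square.
    return (n_dot_l * 0.5 + 0.5) ** 2

def wrapped_lambert(n_dot_l, wrap):
    # Light "wraps" past the terminator by `wrap`; wrap = 0 is plain Lambert.
    return max(0.0, (n_dot_l + wrap) / (1.0 + wrap))

for n_dot_l in (-1.0, -0.5, 0.0, 0.5, 1.0):
    print(n_dot_l,
          lambert(n_dot_l),
          half_lambert(n_dot_l),
          round(wrapped_lambert(n_dot_l, 0.5), 3))
```

Plain Lambert is already black at the terminator (N·L = 0), while Half Lambert still returns 0.25 there and only reaches black on the side facing directly away from the light.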

LUT

- LUT: Look Up Texture

- Also called a Ramp Texture

- Ramp: an increase or decrease; a gradient

Lake

- 2000 - Lake

- Stylized Rendering Techniques For Scalable Real-Time 3D Animation

- Uses a lookup texture

- NdotL - LUT Texture(1D)

BARLA

- 2006 - BARLA

- X-Toon: An extended toon shader - Pascal Barla, Joëlle Thollot, Lee Markosian

- Uses a lookup texture

- NdotL, Detail - LUT Texture(2D)

Lighting Model - PBR

Physically based

Cook-Torrance

- 1982 - Robert L.Cook & Kenneth E. Torrance - A Reflectance Model For Computer Graphics

- Microfacet theory

- Focuses on specular reflection from rough surfaces

Ward

- 1992 - Gregory J. Ward - Measuring and modeling anisotropic reflection

- Based on empirical data; rarely used.

Oren-Nayar

- 1994 - Michael Oren & Shree K. Nayar - Generalization of Lambert’s Reflectance Model

- Focuses on diffuse reflection from rough surfaces

half NdotL = max(0.0, dot(N, L));

half NdotV = max(0.0, dot(N, V));

half VdotL = dot(V, L); // left unclamped so that s can become negative

half s = VdotL - NdotL * NdotV;

half t = lerp(1.0, max(NdotL, NdotV), step(0.0, s));

// _OrenNayarSigma is assumed to hold the squared roughness (sigma^2).

half3 A = 1.0 + _OrenNayarSigma * (_OrenNayarAlbedo / (_OrenNayarSigma + 0.13) + 0.5 / (_OrenNayarSigma + 0.33));

half3 B = 0.45 * _OrenNayarSigma / (_OrenNayarSigma + 0.09);

half3 diffuse = _OrenNayarAlbedo * max(0.0, NdotL) * (A + B * s / t) / 3.14159265;

Modified Phong

- Lafortune and Willems (1994)

half norm = (shininess + 2.0) / (2.0 * PI);

half3 R = reflect(-L, N);

half VdotR = max(0.0, dot(V, R));

half specular = norm * pow(VdotR, shininess);

Ashikhmin-Shirley

- 2000 - Michael Ashikhmin & Peter Shirley - An Anisotropic Phong BRDF Model

- Phong specular

Fakey Oren-Nayar (optimized Oren-Nayar)

half OrenNayar_Fakey(half3 N, half3 L, half3 V, half roughness)

{

half LdotN = dot(L, N);

half VdotN = dot(V, N);

half result = saturate(LdotN);

half soft_rim = saturate(1 - VdotN / 2);

const half FAKEY_MAGIC = 0.62;

half fakey = pow(1 - result * soft_rim, 2);

fakey = FAKEY_MAGIC - fakey * FAKEY_MAGIC;

return lerp(result, fakey, roughness);

}

Disney

- SIGGRAPH 2012 - Brent Burley - Physically Based Shading at Disney

- Many parameters

Ref

- https://www.cnblogs.com/timlly/p/10631718.html

- https://www.jordanstevenstechart.com/physically-based-rendering

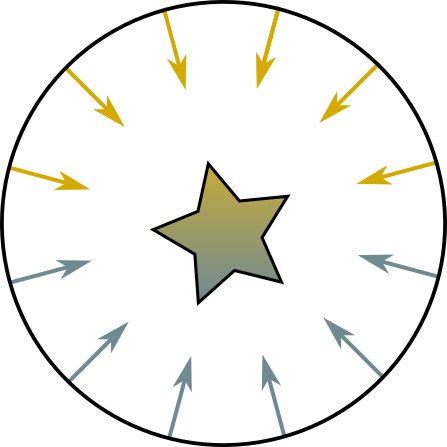

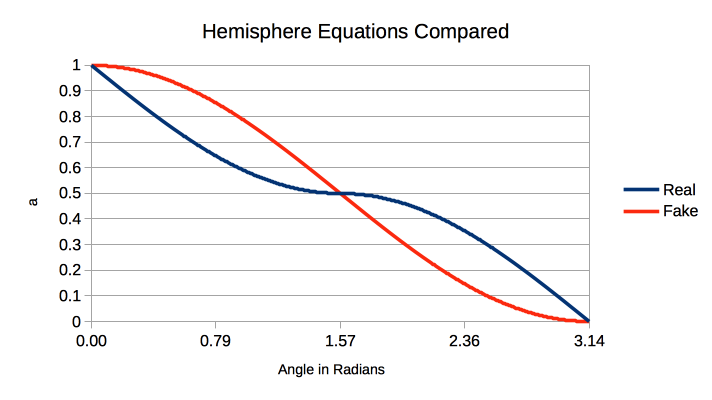

HemisphereLight

if (degree <= 90)

x = 1 - (0.5 * sin(degree));

else

x = 0.5 * sin(degree);

RealColor = x * TopColor + (1 - x) * BottomColor;

=================================================

// Can be replaced by a simplified version whose error is relatively small.

x = 0.5 + (0.5 * cos(degree));

// - The branch is gone.

// - cos(degree) can be replaced by a dot product:

// x = 0.5 + (0.5 * dot(N, L));

FakeColor = x * TopColor + (1 - x) * BottomColor;

half hemiWeight = 0.5 + 0.5 * dot(N, L);

half3 diffuse = lerp(_GroundColor, _SkyColor, hemiWeight);

half3 camPositionWS = GetCurrentViewPosition();

half3 L_VS = GetWorldSpaceViewDir(L);

half skyWeight = 0.5f + 0.5 * max(0, dot(N, normalize(camPositionWS + L_VS)));

half groundWeight = 0.5f + 0.5 * max(0, dot(N, normalize(camPositionWS - L_VS)));

half3 specular = (max(0, pow(skyWeight, _SpecularPower)) + max(0, pow(groundWeight, _SpecularPower)))

* _SpecularNormFactor

* hemiWeight

* diffuse;

half3 result = diffuse + specular;
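
The simplified hemisphere weight `0.5 + 0.5 * dot(N, L)` followed by a `lerp` between ground and sky colors can be tried on a few normals. In this Python sketch the colors and the sky direction are hypothetical values chosen for illustration.

```python
def lerp(a, b, t):
    return [x + (y - x) * t for x, y in zip(a, b)]

def hemisphere_diffuse(normal, sky_dir, sky_color, ground_color):
    n_dot_l = sum(n * l for n, l in zip(normal, sky_dir))
    weight = 0.5 + 0.5 * n_dot_l      # 1 facing the sky, 0 facing the ground
    return lerp(ground_color, sky_color, weight)

sky_color = [0.5, 0.75, 1.0]          # hypothetical _SkyColor
ground_color = [0.25, 0.125, 0.0]     # hypothetical _GroundColor
sky_dir = [0.0, 1.0, 0.0]

up = hemisphere_diffuse([0.0, 1.0, 0.0], sky_dir, sky_color, ground_color)
side = hemisphere_diffuse([1.0, 0.0, 0.0], sky_dir, sky_color, ground_color)
down = hemisphere_diffuse([0.0, -1.0, 0.0], sky_dir, sky_color, ground_color)
print(up, side, down)
```

A normal pointing sideways receives an even mix of the two colors, with no branch needed.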

Ref

- https://github.com/hughsk/glsl-hemisphere-light

- https://emunix.emich.edu/~mevett/GraphicsCourse/Labs/MattsLab5Lighting/index.html#Hemisphere

- https://en.wikibooks.org/wiki/GLSL_Programming/Unity/Diffuse_Reflection_of_Skylight

- Hemisphere Lights - Interactive 3D Graphics

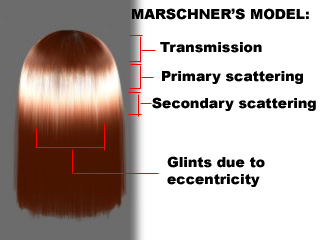

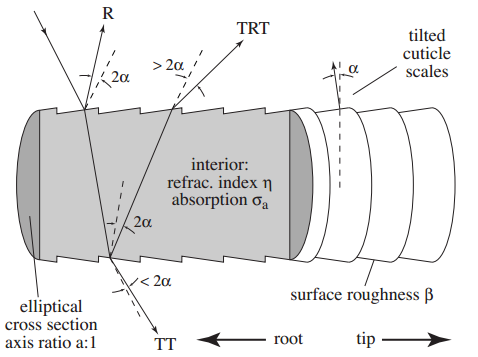

Hair Anisotropic

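Anisotropy means that a material's physical properties differ depending on direction.

For example, surfaces such as brushed aluminum, fibers, cloth, and muscle

show optical anisotropy: their reflectance changes with the direction of the incoming light.

- https://ko.wikipedia.org/wiki/비등방성

- Kajiya-Kay model - SIGGRAPH 1989

- Fine for short hair; weak at light scattering.

- Steve Marschner model - SIGGRAPH 2003

- Improved light scattering (reflection / internal scattering / transmission)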

- Scheuermann - Hair Rendering and Shading - GDC 2004

- 【Unite Tokyo 2018】『崩壊3rd』開発者が語るアニメ風レンダリングの極意

- https://graphics.pixar.com/library/DataDrivenHairScattering/

Kajiya-Kay

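- SIGGRAPH 1989

// Sphere

// T | r | right

// B | g | up

// N | b | perpendicular

// In the paper, T runs along the hair strand, pointing up toward the head.

// half3 T = normalize(IN.T);

// On the sphere B is the one pointing up, so use B to get the intended direction.

half3 T = normalize(IN.B);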

half sinTH = sqrt(1 - dot(T, H) * dot(T, H));

half specular = pow(sinTH, specularPower);
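
The Kajiya-Kay term above depends only on the sine of the angle between the strand tangent T and the half vector H. A short Python sketch, with made-up vectors and exponent, shows the two extremes:

```python
import math

def kajiya_kay_specular(tangent, half_vec, power):
    t_dot_h = sum(t * h for t, h in zip(tangent, half_vec))
    # sin(T, H) = sqrt(1 - cos^2); clamp to guard against tiny negatives.
    sin_th = math.sqrt(max(0.0, 1.0 - t_dot_h * t_dot_h))
    return sin_th ** power

T = [0.0, 1.0, 0.0]                                  # strand direction
across = kajiya_kay_specular(T, [0.0, 0.0, 1.0], 8)  # H perpendicular to T
along = kajiya_kay_specular(T, [0.0, 1.0, 0.0], 8)   # H parallel to T
s = math.sqrt(0.5)
diagonal = kajiya_kay_specular(T, [0.0, s, s], 2)    # 45 degrees
print(across, along, diagonal)
```

The highlight is strongest when H is perpendicular to the strand and vanishes when H runs along it, which produces the band-shaped highlight typical of hair.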

Marschner

- SIGGRAPH 2003

- blog - Hair Rendering Lighting Model - (Marschner)

- pdf - Light Scattering from Human Hair Fibers - Stephen R. Marschner, Henrik Wann Jensen, Mike Cammarano

- 2020 - Dev Weeks: A3 Still Alive - Technical Art Review

| R | reflection |

| TRT | scattering |

| TT | transmission |

- Uses two reflections.

- Shift the tangent (+ TangentShiftTex)

- First reflection (R)

- Second reflection (TRT) (+ SpecularMaskTex)

Scheuermann

- GDC 2004 Hair Rendering and Shading

- A mix of Kajiya-Kay and Marschner

Animation

Ref

- Hair in Tomb Raider

- ShaderX3 Advanced Rendering with DirectX and OpenGL

- 2.14 Hair Rendering and Shading

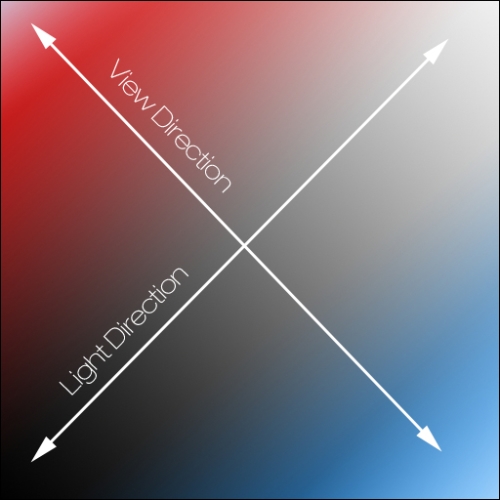

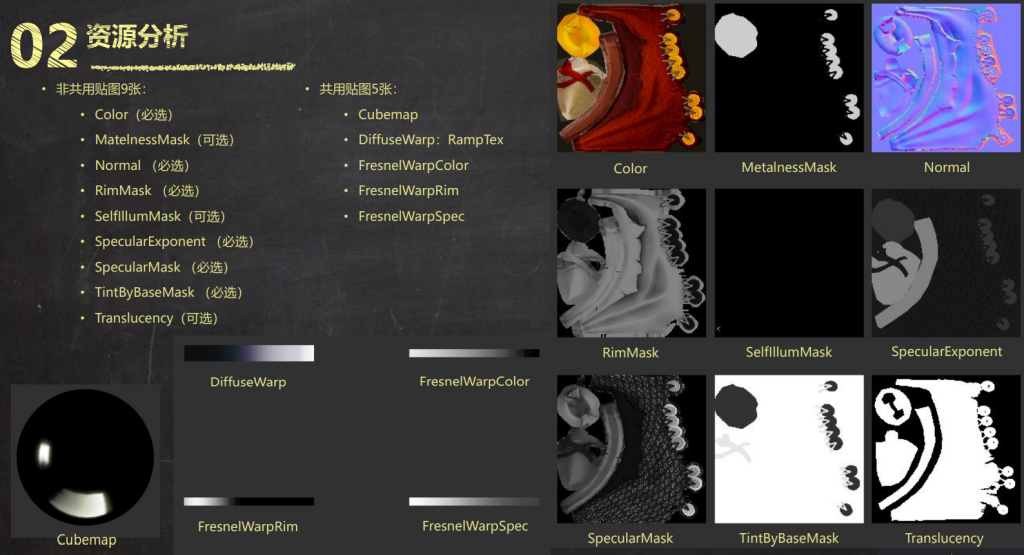

BRDF

- Bidirectional reflectance distribution function

BRDF Texture

- Also called a fake BRDF.

half u = dot(L, N) * 0.5 + 0.5;

half v = dot(V, N);

half3 brdfTex = SAMPLE_TEXTURE2D(_BrdfTex, sampler_BrdfTex, half2(u, v)).rgb;

TODO - Ambient BRDF

- Gotanda 2010

| x | dot(V, N) |

| y | Shininess |

TODO - Environment IBL Map

- Schlick’s approximation // fresnel

- Lazarov 2013

| x | dot(V, N) // cosθv |

| y | Roughness |

DFG LUT

| DFG | |

|---|---|

| D | Distribution |

| F | Fresnel |

| G | Geometry |

Example

Color c = diffuse * intensity + fresnelReflectionColor * fresnelTerm + translucentColor * t + Color(0, 0 ,0, specular);

c *= intensity;

half u = dot(L, N) * 0.5 + 0.5;

half v = dot(H, N);

half4 brdfTex = SAMPLE_TEXTURE2D(_BrdfTex, sampler_BrdfTex, half2(u, v)); // keep alpha: it is used below

half3 color = albedo * (brdfTex.rgb + gloss * brdfTex.a) * 2;

// +--- B ---+   A : surface facing the light

// |         |   B : surface facing away from the light

// D         C   C : surface facing the camera

// |         |   D : surface at 90 degrees to the camera

// +--- A ---+

OffsetU // [-1, 1]

OffsetV // [-1, 1]

half2 brdfUV = float2(saturate(NdotV + OffsetU), saturate((LdotN + 1) * 0.5 + OffsetV));

brdfUV.y = 1 - brdfUV.y;

half3 brdfTex = tex2D(BRDFSampler, brdfUV).rgb;

half3 color = ambient + brdfTex;

Ref

- SIGGRAPH 2013 Real Shading in Unreal Engine 4

- https://learnopengl.com/PBR/IBL/Specular-IBL

- SIGGRAPH 2019 - A Journey Through Implementing Multiscattering BRDFs and Area Lights

- https://teodutra.com/unity/shaders/cook-torrance/lookup-texture/2019/03/28/Lookup-The-Cook-Torrance/

- SIGGRAPH 2017 - Physically-Based Materials: Where Are We?

- Multi-Textured BRDF-based Lighting - Chris Wynn

- http://www.mentalwarp.com/~brice/brdf.php

- http://wiki.polycount.com/wiki/BDRF_map

- Stoyan Dimitrov: How Space Ape uses Uber Shaders in Unity for Mobile Games

PBR

- PBR (Physically Based Rendering) / PBS (Physically Based Shading)

Energy = diffuse + specular + transmission

- https://renderwonk.com/publications/

[Ndc13]Ndc 2013 김동석:UDK로 물리기반 셰이더 만들기

SIGGRAPH 2010 tri-Ace Practical Implementation of Physically-Based Shading Models at tri-Ace (Yoshiharu Gotanda) slides / course notes

siggraph 2011 Lazarov Physically Based Lighting in Call of Duty: Black Ops Dimitar Lazarov, Lead Graphics Engineer, Treyarch

Sébastien Lagarde Moving Frostbite to PBR (Sébastien Lagarde & Charles de Rousiers) https://blog.selfshadow.com/publications/s2014-shading-course/frostbite/s2014_pbs_frostbite_slides.pdf

- Etc

- BSDF(Bidirectional Scattering Distribution Function)

- BTDF(Bidirectional Transmission Distribution Function)

- BSSRDF(Bidirectional Scattering Surface Reflectance Distribution Function)

- SPDF(Scattering Probability Density Function)

Custom PBR

TODO

- https://www.slideshare.net/dongminpark71/ndc19-pbr-143928930

- 언차티드4 테크아트 파트4 Special Case Materials - Moss & Wetness & Glass

- 언차티드4 테크아트 파트3 MicroShadowBRDF Term

Ref

- https://www.slideshare.net/MRESC/pbr-vol2-131205432

- Adobe The PBR Guide

- https://leegoonz.blog/2020/01/05/energy-conserved-specular-blinn-phong/

- https://www.rorydriscoll.com/2009/01/25/energy-conservation-in-games/

Materials

- https://dev.epicgames.com/documentation/en-us/unreal-engine/physically-based-materials-in-unreal-engine

- https://creativecloud.adobe.com/learn/substance-3d-designer/web/the-pbr-guide-part-1

- https://creativecloud.adobe.com/learn/substance-3d-designer/web/the-pbr-guide-part-2

custom

Diffuse Reflection / Specular Reflection

| | Specular | Diffuse |

|---|---|---|

| Non-metal | white | base color |

| Metal | base color | white |

- Workflows

- ref

- Physically based material basics

- Indirect light (environment map) matters more than direct light.

- https://marmoset.co/shop/

- Perpetual License $31900 one-time fee (USD)

- Diffuse and specular

- How To Split Specular And Diffuse In Real Images

- https://lifeisforu.tistory.com/382

- https://www.virial.com/reflection-models.html

- metal - roughness

- base color: BRDF color

- metallic: reflectance (specular level, index of refraction; nicknamed "artistic metallic"; 1.6)

- roughness: glossiness, linearized and inverted.

- Roughly 0.5 for people.

- specular / glossiness

- diffuse (albedo) / specular / glossiness

- ref

base color / metallic / roughness workflow

- Drawbacks

- Metallic-edge artifacts appear when texel density is low

- base color

- PBR Safe Color

- https://helpx.adobe.com/substance-3d-designer/substance-compositing-graphs/nodes-reference-for-substance-compositing-graphs/node-library/material-filters/pbr-utilities/pbr-albedo-safe-color.html

- https://helpx.adobe.com/substance-3d-designer/substance-compositing-graphs/nodes-reference-for-substance-compositing-graphs/node-library/material-filters/pbr-utilities/pbr-basecolor-metallic-validate.html

- Metallic

- albedo: should be bright (it often comes out darker than expected)

- It is the switch between metal and non-metal, so avoid in-between values.

- Roughness: the higher it is, the lower the share of specular reflection.

- The Fresnel difference only shows when the surface is viewed at a tilt.

FlatShader

- Removing the smoothing angle between normals gives every face of the mesh a flat look.

- This can be done by changing the import settings or at runtime.

Changing the import settings

- https://gamedevelopment.tutsplus.com/articles/go-beyond-retro-pixel-art-with-flat-shaded-3d-in-unity--gamedev-12259

fbx > Normals & Tangents > Normals > Calculate

fbx > Normals & Tangents > Smoothing Angle > 0

At runtime

void FlatShading ()

{

MeshFilter mf = GetComponent<MeshFilter>();

Mesh mesh = Instantiate (mf.sharedMesh) as Mesh;

mf.sharedMesh = mesh;

Vector3[] oldVerts = mesh.vertices;

int[] triangles = mesh.triangles;

Vector3[] vertices = new Vector3[triangles.Length];

for (int i = 0; i < triangles.Length; i++)

{

vertices[i] = oldVerts[triangles[i]];

triangles[i] = i;

}

mesh.vertices = vertices;

mesh.triangles = triangles;

mesh.RecalculateNormals();

}

Shader

- Alternatively, it can be done in the shader.

- Unity로 실습하는 Shader (5) - Flat Shading

- https://catlikecoding.com/unity/tutorials/advanced-rendering/flat-and-wireframe-shading/

half3 x = ddx(IN.positionWS);

half3 y = ddy(IN.positionWS);

half3 N = normalize(-cross(x, y));
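
The shader version works because `ddx` and `ddy` of the world position are both vectors lying in the triangle's plane, so their cross product is the face normal. The same idea on the CPU, using two triangle edges instead of screen-space derivatives, can be sketched in Python (helper names are illustrative; the sign of the result depends on the winding order, just as the shader needs the negation):

```python
import math

def sub(a, b):
    return [x - y for x, y in zip(a, b)]

def cross(a, b):
    return [a[1] * b[2] - a[2] * b[1],
            a[2] * b[0] - a[0] * b[2],
            a[0] * b[1] - a[1] * b[0]]

def normalize(v):
    length = math.sqrt(sum(x * x for x in v))
    return [x / length for x in v]

def face_normal(p0, p1, p2):
    # Two edges span the triangle's plane; their cross product is perpendicular to it.
    return normalize(cross(sub(p1, p0), sub(p2, p0)))

n = face_normal([0.0, 0.0, 0.0], [1.0, 0.0, 0.0], [0.0, 1.0, 0.0])
print(n)
```

Every point on the triangle gets this same normal, which is what produces the faceted look.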

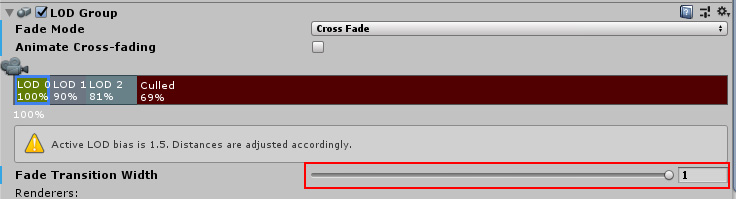

LOD

- LOD : Level Of Detail

- tex2Dlod와 tex2Dbias의 비교연구

| Level of detail (N starts at 0) | | |

|---|---|---|

| tex2Dlod | SAMPLE_TEXTURE2D_LOD | N (fixed mip level) |

| tex2Dbias | SAMPLE_TEXTURE2D_BIAS | current mip + N |

| QualitySettings.lodBias | Scales the distance at which the LOD switches | Smaller values switch LOD sooner |

| QualitySettings.maximumLODLevel | Sets the maximum LOD level | |
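
The difference between the two sampling rows in the table can be written as a tiny mip-selection model. This Python sketch is a simplification under stated assumptions: `hardware_mip` stands for whatever level the GPU would pick on its own, filtering between levels is ignored, and the function names are made up.

```python
def mip_for_lod(n, mip_count):
    """tex2Dlod-style: use mip N, whatever the hardware would have picked."""
    return min(max(n, 0.0), mip_count - 1)

def mip_for_bias(hardware_mip, n, mip_count):
    """tex2Dbias-style: offset the hardware-selected mip by N."""
    return min(max(hardware_mip + n, 0.0), mip_count - 1)

mip_count = 8
print(mip_for_lod(2.0, mip_count))          # fixed level
print(mip_for_bias(3.0, -1.0, mip_count))   # one level sharper than the hardware choice
print(mip_for_bias(3.0, 10.0, mip_count))   # clamped to the smallest mip
```

A negative bias moves toward the larger, sharper mips, which is why a mip bias setting is a common fix for textures that look blurry at a distance.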

// ref: https://www.unity3dtips.com/unity-fix-blurry-textures-on-mipmap/

UnityEditor.EditorPrefs.SetBool("DeveloperMode", true);

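// Switch the Inspector to Debug-Internal mode; then

// Texture Settings > Mip Bias becomes editable.

Sharpening mipmaps

- Increase mipmap detail

- Either author the mipmaps separately as DDS,

- or process them with an AssetPostprocessor

1. Generate mip 1 from mip 0 (bilinear filter)

2. Apply a sharpening filter to mip 1

3. Generate mip 2 from the result of step 2 (bilinear filter)

4. Apply a sharpening filter to mip 2

5. Repeat until the last mip level has been generated.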

AssetPostprocessor

using System.IO;

using UnityEditor;

using UnityEngine;

public class MipmapsSharperImporter : AssetPostprocessor

{

void OnPostprocessTexture(Texture2D texture)

{

if (!Path.GetFileNameWithoutExtension(assetPath).EndsWith("_sharppen"))

{

return;

}

if (texture.mipmapCount == 0)

{

return;

}

for (int mipmapLevel = 1; mipmapLevel < texture.mipmapCount; ++mipmapLevel)

{

ApplyBilinearFilter(texture, mipmapLevel);

ApplySharpeningFilter(texture, mipmapLevel);

}

texture.Apply(updateMipmaps: false, makeNoLongerReadable: true);

}

void ApplyBilinearFilter(Texture2D texture, int currMipmapLevel)

{

int currMipmapWidth = texture.width / (1 << currMipmapLevel);

int currMipmapHeight = texture.height / (1 << currMipmapLevel);

Color[] currPixels = new Color[currMipmapWidth * currMipmapHeight];

int prevMipmapLevel = currMipmapLevel - 1;

int prevMipmapWidth = texture.width / (1 << prevMipmapLevel);

Color[] prevPixels = texture.GetPixels(prevMipmapLevel);

for (int y = 0; y < currMipmapHeight; ++y)

{

for (int x = 0; x < currMipmapWidth; ++x)

{

int px = 2 * x;

int py = 2 * y;

Color c00 = prevPixels[(py) * prevMipmapWidth + (px)];

Color c10 = prevPixels[(py) * prevMipmapWidth + (px + 1)];

Color c01 = prevPixels[(py + 1) * prevMipmapWidth + (px)];

Color c11 = prevPixels[(py + 1) * prevMipmapWidth + (px + 1)];

Color b0 = Color.Lerp(c00, c10, 0.5f);

Color b1 = Color.Lerp(c01, c11, 0.5f);

Color final = Color.Lerp(b0, b1, 0.5f);

currPixels[y * currMipmapWidth + x] = final;

}

}

texture.SetPixels(currPixels, currMipmapLevel);

}

private void ApplySharpeningFilter(Texture2D texture, int mipmapLevel)

{

float _Sharpness = 0.1f;

Color[] pixels = texture.GetPixels(mipmapLevel);

int mipmapWidth = texture.width / (1 << mipmapLevel);

int mipmapHeight = texture.height / (1 << mipmapLevel);

const int HALF_RANGE = 1;

for (int y = 0; y < mipmapHeight; ++y)

{

for (int x = 0; x < mipmapWidth; ++x)

{

Color color = pixels[y * mipmapWidth + x];

Color sum = Color.black;

for (int i = -HALF_RANGE; i <= HALF_RANGE; i++)

{

for (int j = -HALF_RANGE; j <= HALF_RANGE; j++)

{

sum += pixels[Mathf.Clamp(y + j, 0, mipmapHeight - 1) * mipmapWidth + Mathf.Clamp(x + i, 0, mipmapWidth - 1)];

}

}

Color sobel8 = color * Mathf.Pow(HALF_RANGE * 2 + 1, 2) - sum;

Color addColor = sobel8 * _Sharpness;

color += addColor;

pixels[y * mipmapWidth + x] = color;

}

}

texture.SetPixels(pixels, mipmapLevel);

}

}

HLOD

- HLOD : Hierarchical Level Of Detail

- Unite2019 HLOD를 활용한 대규모 씬 제작 방법

Ref

- https://docs.unity3d.com/ScriptReference/AssetPostprocessor.OnPostprocessTexture.html

- https://zhuanlan.zhihu.com/p/413834301

- https://community.khronos.org/t/texture-lod-calculation-useful-for-atlasing/61475

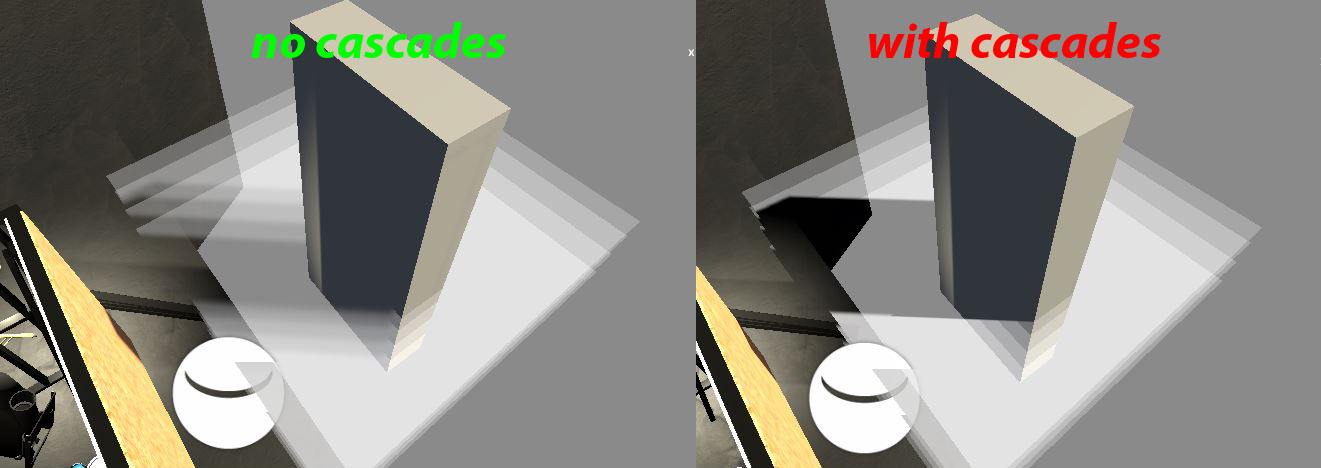

Shadow

- Draw the shadows with the ShadowCaster pass

- In the main pass

- get the shadowCoord

- OUT.shadowCoord = TransformWorldToShadowCoord(OUT.positionWS);

- get the light

- Light mainLight = GetMainLight(inputData.shadowCoord);

- apply the shadow

- half shadow = mainLight.shadowAttenuation;

- finalColor.rgb *= shadow;

// Light & Shadow

#pragma multi_compile _ _MAIN_LIGHT_SHADOWS

#pragma multi_compile _ _MAIN_LIGHT_SHADOWS_CASCADE

#pragma multi_compile _ _ADDITIONAL_LIGHTS

#pragma multi_compile _ _ADDITIONAL_LIGHT_SHADOWS

#pragma multi_compile _ _SHADOWS_SOFT

UnityEngine.Rendering.Universal.ShaderKeywordStrings

// com.unity.render-pipelines.universal/ShaderLibrary/Shadows.hlsl

float4 TransformWorldToShadowCoord(float3 positionWS)

{

#ifdef _MAIN_LIGHT_SHADOWS_CASCADE

half cascadeIndex = ComputeCascadeIndex(positionWS);

#else

half cascadeIndex = half(0.0);

#endif

float4 shadowCoord = mul(_MainLightWorldToShadow[cascadeIndex], float4(positionWS, 1.0));

return float4(shadowCoord.xyz, 0);

}

OUT.shadowCoord = TransformWorldToShadowCoord(positionWS);// float4

VertexPositionInputs vertexInput = GetVertexPositionInputs(v.vertex.xyz);

OUT.shadowCoord = GetShadowCoord(vertexInput);

Light mainLight = GetMainLight(inputData.shadowCoord);

half shadow = mainLight.shadowAttenuation;

finalColor.rgb *= shadow;

Unity's documentation on this is hard to find.

// Toggle the alpha test

#define _ALPHATEST_ON

// Toggle fog on transparent

#define _ENABLE_FOG_ON_TRANSPARENT

UsePass "Universal Render Pipeline/Lit/ShadowCaster"

com.unity.render-pipelines.universal/Shaders/ShadowCasterPass.hlsl

// You can also optionally disable shadow receiving for transparent to improve performance. To do so, disable Transparent Receive Shadows in the Forward Renderer asset

_MAIN_LIGHT_SHADOWS _MAIN_LIGHT_SHADOWS_CASCADE _MAIN_LIGHT_SHADOWS_SCREEN ?? => MAIN_LIGHT_CALCULATE_SHADOWS

!_MAIN_LIGHT_SHADOWS_CASCADE => REQUIRES_VERTEX_SHADOW_COORD_INTERPOLATOR

_ADDITIONAL_LIGHT_SHADOWS => ADDITIONAL_LIGHT_CALCULATE_SHADOWS

// cascade

// https://forum.unity.com/threads/what-does-shadows_screen-mean.568225/

// https://forum.unity.com/threads/water-shader-graph-transparency-and-shadows-universal-render-pipeline-order.748142/

PipelineAsset> Shadows > Cascades> No Cascades

// com.unity.render-pipelines.universal/ShaderLibrary/Shadows.hlsl

#if !defined(_RECEIVE_SHADOWS_OFF)

#if defined(_MAIN_LIGHT_SHADOWS) || defined(_MAIN_LIGHT_SHADOWS_CASCADE) || defined(_MAIN_LIGHT_SHADOWS_SCREEN)

#define MAIN_LIGHT_CALCULATE_SHADOWS

#if !defined(_MAIN_LIGHT_SHADOWS_CASCADE)

#define REQUIRES_VERTEX_SHADOW_COORD_INTERPOLATOR

#endif

#endif

#if defined(_ADDITIONAL_LIGHT_SHADOWS)

#define ADDITIONAL_LIGHT_CALCULATE_SHADOWS

#endif

#endif

TEXTURE2D_SHADOW(_MainLightShadowmapTexture);

SAMPLER_CMP(sampler_MainLightShadowmapTexture);

half4 _MainLightShadowParams; // (x: shadowStrength, y: 1.0 if soft shadows, 0.0 otherwise, z: main light fade scale, w: main light fade bias)

float4 _MainLightShadowmapSize; // (xy: 1/width and 1/height, zw: width and height)

struct ShadowSamplingData

{

half4 shadowOffset0;

half4 shadowOffset1;

half4 shadowOffset2;

half4 shadowOffset3;

float4 shadowmapSize;

};

// ShadowParams

// x: ShadowStrength

// y: 1.0 if shadow is soft, 0.0 otherwise

half4 GetMainLightShadowParams()

{

return _MainLightShadowParams;

}

half MainLightRealtimeShadow(float4 shadowCoord)

{

ShadowSamplingData shadowSamplingData = GetMainLightShadowSamplingData();

half4 shadowParams = GetMainLightShadowParams();

return SampleShadowmap(TEXTURE2D_ARGS(_MainLightShadowmapTexture, sampler_MainLightShadowmapTexture), shadowCoord, shadowSamplingData, shadowParams, false);

}

half AdditionalLightRealtimeShadow(int lightIndex, float3 positionWS, half3 lightDirection)

real SampleShadowmap(TEXTURE2D_SHADOW_PARAM(ShadowMap, sampler_ShadowMap), float4 shadowCoord, ShadowSamplingData samplingData, half4 shadowParams, bool isPerspectiveProjection = true) _SHADOWS_SOFT

PipelineAsset> Shadows > Cascades> Soft Shadows

_SHADOWS_SOFT : real SampleShadowmapFiltered(TEXTURE2D_SHADOW_PARAM(ShadowMap, sampler_ShadowMap), float4 shadowCoord, ShadowSamplingData samplingData)

float4 TransformWorldToShadowCoord(float3 positionWS) : _MAIN_LIGHT_SHADOWS_CASCADE

_MAIN_LIGHT_SHADOWS_CASCADE : half ComputeCascadeIndex(float3 positionWS)

float3 ApplyShadowBias(float3 positionWS, float3 normalWS, float3 lightDirection)

LerpWhiteTo

#pragma multi_compile_fog

OUT.fogCoord = ComputeFogFactor(OUT.positionHCS.z);

half3 ambient = SampleSH(IN.N);

finalColor.rgb *= ambient;

finalColor.rgb = MixFog(finalColor.rgb, IN.fogCoord);

ShadowAttenuation

// URP

half4 shadowCoord = TransformWorldToShadowCoord(positionWS);

// or

// VertexPositionInputs vertexInput = GetVertexPositionInputs(IN.positionOS.xyz);

// half4 shadowCoord = GetShadowCoord(vertexInput);

half shadowAttenuation = MainLightRealtimeShadow(shadowCoord);

// or

// ShadowSamplingData shadowSamplingData = GetMainLightShadowSamplingData();

// half4 shadowParams = GetMainLightShadowParams();

// half shadowAttenuation = SampleShadowmap(TEXTURE2D_ARGS(_MainLightShadowmapTexture, sampler_MainLightShadowmapTexture), shadowCoord, shadowSamplingData, shadowParams, false);

// or

// Light mainLight = GetMainLight(i.shadowCoord);

// half shadowAttenuation = mainLight.shadowAttenuation;

ShadowCaster

// The pass that draws the shadows

Pass

{

Tags{"LightMode" = "ShadowCaster"}

}

vert()

{

OUT.positionCS = TransformWorldToHClip(ApplyShadowBias(positionWS, normalWS, lightDirectionWS));

}

frag()

{

1 : lit

0 : shadow

return 1 or 0;

}

Pass

{

Name "ShadowCaster"

Tags

{

"LightMode" = "ShadowCaster"

}

ZWrite On

Cull Back

HLSLPROGRAM

#pragma target 3.5

#pragma vertex shadowVert

#pragma fragment shadowFrag

#include "Packages/com.unity.render-pipelines.core/ShaderLibrary/Common.hlsl" // real

#include "Packages/com.unity.render-pipelines.core/ShaderLibrary/CommonMaterial.hlsl" // LerpWhiteTo

#include "Packages/com.unity.render-pipelines.universal/ShaderLibrary/Core.hlsl"

#include "Packages/com.unity.render-pipelines.universal/ShaderLibrary/Shadows.hlsl" // ApplyShadowBias

struct Attributes

{

float4 positionOS : POSITION;

float4 normal : NORMAL;

};

struct Varyings

{

float4 positionHCS : SV_POSITION;

};

Varyings shadowVert(Attributes IN)

{

Varyings OUT = (Varyings)0;

float3 positionWS = TransformObjectToWorld(IN.positionOS.xyz);

float3 normalWS = TransformObjectToWorldNormal(IN.normal.xyz);

OUT.positionHCS = TransformWorldToHClip(ApplyShadowBias(positionWS, normalWS, _MainLightPosition.xyz));

return OUT;

}

half4 shadowFrag(Varyings IN) : SV_Target

{

return 0;

}

ENDHLSL

}

#include "Packages/com.unity.render-pipelines.core/ShaderLibrary/Common.hlsl" // real

#if defined(SHADER_API_MOBILE) || defined(SHADER_API_SWITCH)

#define HAS_HALF 1

#else

#define HAS_HALF 0

#endif

#if REAL_IS_HALF

#define real half

#define real2 half2

#define real3 half3

#define real4 half4

#include "Packages/com.unity.render-pipelines.universal/ShaderLibrary/Shadows.hlsl" // ApplyShadowBias

float3 ApplyShadowBias(float3 positionWS, float3 normalWS, float3 lightDirection)

real SampleShadowmap(TEXTURE2D_SHADOW_PARAM(ShadowMap, sampler_ShadowMap), float4 shadowCoord, ShadowSamplingData samplingData, half4 shadowParams, bool isPerspectiveProjection = true)

// Not otherwise used here, but this file is where LerpWhiteTo lives.

#include "Packages/com.unity.render-pipelines.core/ShaderLibrary/CommonMaterial.hlsl" // LerpWhiteTo

real LerpWhiteTo(real b, real t)

Shadow map

- Compute Z-depth

- Render the scene

- Store the Z-depth in the depth buffer (depth map)

world > View[Light] > Proj[Light] Light's View Matrix > Light's Projection Matrix > transform NDC > transform texture Space

- Draw the shadows

- Render the scene

- Test the Z-depth against the depth buffer

if (fragment Z-depth > sampled Z-depth) { shadow : 0 } else { lit : 1 }
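
The depth comparison above can be sketched as a single function. This Python version assumes depths are measured from the light with larger values farther away; the `bias` parameter is an addition for illustration, showing the usual remedy when a lit surface lands just behind its own stored depth because of limited precision.

```python
def shadow_attenuation(fragment_depth, sampled_depth, bias=0.0):
    """Return 1.0 when lit and 0.0 when shadowed."""
    return 0.0 if fragment_depth - bias > sampled_depth else 1.0

# Something nearer to the light was recorded in the shadow map: shadowed.
print(shadow_attenuation(0.8, 0.5))

# The surface itself was recorded, but precision error pushes it slightly behind.
print(shadow_attenuation(0.5005, 0.5))              # wrongly shadowed
print(shadow_attenuation(0.5005, 0.5, bias=0.001))  # lit once a small bias is applied
```

Too large a bias has the opposite problem: real shadows start to detach from the objects casting them.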


SSSM(Screen Space Shadow Map)

- Unity Shader - Custom SSSM(Screen Space Shadow Map) 自定义屏幕空间阴影图

Shadow Acne

DepthOnly

Pass

{

Tags

{

"LightMode" = "DepthOnly"

}

ZWrite On

ColorMask 0

HLSLPROGRAM

...

half4 shadowFrag(Varyings IN) : SV_Target

{

return 0;

}

ENDHLSL

}

Meta

- Used when baking lightmaps.

- Useful when building internal debugging tools.

Pass

{

Tags

{

"LightMode" = "Meta"

}

...

}

Ref

- URP 셰이더 코딩 튜토리얼 : 제 1편 - Unlit Soft Shadow

- URP Default Unlit Based to Custom Lighting

- urp管线的自学hlsl之路 第十篇 主光源阴影投射和接收

- builtin - Rendering 7 Shadows

- [Unity] URP Custom Shadow Shader 도전하기 : Frame Debugger로 원인 찾기(1/3)

- Reading a depth value from Unity’s shadow map?

Dithering

- Dithering: deliberately applying noise to an image

- Can improve perceived detail (e.g. reducing banding)

- jitter: a small random displacement
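
How added noise reduces banding can be shown with ordered dithering, one common form of the technique. The Python sketch below quantizes a flat gray value to two levels with and without a per-pixel threshold from the standard 4x4 Bayer matrix; the function names are illustrative.

```python
import math

# Standard 4x4 Bayer index matrix (values 0..15).
BAYER_4X4 = [
    [0, 8, 2, 10],
    [12, 4, 14, 6],
    [3, 11, 1, 9],
    [15, 7, 13, 5],
]

def quantize(value, levels):
    # Plain rounding to the nearest of `levels` evenly spaced steps.
    return math.floor(value * (levels - 1) + 0.5) / (levels - 1)

def quantize_dithered(value, levels, x, y):
    # Replace the fixed 0.5 rounding offset with a per-pixel threshold.
    threshold = (BAYER_4X4[y % 4][x % 4] + 0.5) / 16.0
    return math.floor(value * (levels - 1) + threshold) / (levels - 1)

def tile_average(value, levels, dithered):
    total = 0.0
    for y in range(4):
        for x in range(4):
            if dithered:
                total += quantize_dithered(value, levels, x, y)
            else:
                total += quantize(value, levels)
    return total / 16.0

print(tile_average(0.25, 2, dithered=False))
print(tile_average(0.25, 2, dithered=True))
```

Without dithering the whole tile snaps to one level and the original value is lost; with dithering the pattern of on and off pixels averages back to the input, which is why gradients stop showing visible steps.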

Ref

- https://www.ronja-tutorials.com/post/042-dithering/

- GDC2016 - Low Complexity, High Fidelity: The Rendering of INSIDE

Geometry

- VS > HS > TS > GS > FS - shader model 4.0

- https://roystan.net/articles/grass-shader.html

- https://halisavakis.com/my-take-on-shaders-geometry-shaders/

Type

[maxvertexcount(NumVerts)]

void ShaderName ( PrimitiveType DataType Name [ NumElements ], inout StreamOutputObject )

{

}

| PrimitiveType | Num | |

|---|---|---|

| point | 1 | Point list |

| line | 2 | Line list or line strip |

| triangle | 3 | Triangle list or triangle strip |

| lineadj | 4 | Line list with adjacency or line strip with adjacency |

| triangleadj | 6 | Triangle list with adjacency or triangle strip with adjacency |

| StreamOutputObject | |

|---|---|

| PointStream<T> | A sequence of point primitives |

| LineStream<T> | A sequence of line primitives |

| TriangleStream<T> | A sequence of triangle primitives |

Barebone

#pragma vertex vert

#pragma fragment frag

#pragma geometry geom

struct FromVS

{

float4 positionOS : POSITION;

};

struct VStoGS

{

float4 positionOS : SV_POSITION;

};

struct GStoFS

{

float4 positionCS : SV_POSITION;

};

VStoGS vert(FromVS IN)

{

}

[maxvertexcount(3)] // Maximum number of vertices a single invocation may emit.

// Alternative signature that takes only the position:

// void geom(triangle float4 IN[3] : SV_POSITION, uint pid : SV_PrimitiveID, inout TriangleStream<GStoFS> STREAM)

void geom(triangle VStoGS IN[3], uint pid : SV_PrimitiveID, inout TriangleStream<GStoFS> STREAM)

{

GStoFS OUT1;

GStoFS OUT2;

GStoFS OUT3;

STREAM.Append(OUT1);

STREAM.Append(OUT2);

STREAM.Append(OUT3);

// https://docs.microsoft.com/en-us/windows/win32/direct3dhlsl/dx-graphics-hlsl-so-restartstrip

// Ends the current primitive strip and starts a new strip

STREAM.RestartStrip();

}

half4 frag(GStoFS IN) : SV_Target

{

}

Etc

- https://medium.com/@andresgomezjr89/rain-snow-with-geometry-shaders-in-unity-83a757b767c1

- https://jayjingyuliu.wordpress.com/2018/01/24/unity3d-wireframe-shader/

Lightmap

Ref

LPV

- Light Propagation Volumes

Ref

- https://ericpolman.com/2016/06/28/light-propagation-volumes/

- https://blog.naver.com/catllage/221830338176

Noise

Ref

Ray

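| Ray Casting | Shoot a ray and find what it hits. |

| Ray Marching | Shoot a ray and find what it hits, locating the hit with a geometric intersection test (e.g. an SDF (Signed Distance Function)). |

| Ray Tracing | Shoot a ray and find what it hits; from there, shoot further rays in specific directions (reflect/refract). |

| Path Tracing | Shoot a ray and find what it hits; from there, shoot further rays spread evenly (GI). |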
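
The Ray Marching row can be made concrete with sphere tracing, the usual way of marching against an SDF: at each step the ray advances by the distance the SDF reports, which is always safe because nothing can be closer than that. The scene, step limits and names in this Python sketch are assumptions for illustration.

```python
import math

def sd_sphere(point, center, radius):
    # Signed distance: negative inside, zero on the surface, positive outside.
    return math.dist(point, center) - radius

def ray_march(origin, direction, sdf, max_steps=64, max_dist=100.0, eps=1e-4):
    """Return the distance along the ray to the first hit, or None on a miss."""
    t = 0.0
    for _ in range(max_steps):
        point = [o + d * t for o, d in zip(origin, direction)]
        dist = sdf(point)
        if dist < eps:
            return t          # close enough to the surface: hit
        t += dist             # safe step: nothing is nearer than `dist`
        if t > max_dist:
            break
    return None

def scene(point):
    return sd_sphere(point, [0.0, 0.0, 5.0], 1.0)

hit = ray_march([0.0, 0.0, 0.0], [0.0, 0.0, 1.0], scene)
miss = ray_march([0.0, 0.0, 0.0], [0.0, 1.0, 0.0], scene)
print(hit, miss)
```

A ray aimed at the sphere stops at its front surface, one radius short of the center; a ray aimed elsewhere keeps taking larger steps until it passes `max_dist`.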

Ray Marching

- http://jamie-wong.com/2016/07/15/ray-marching-signed-distance-functions/#signed-distance-functions

- https://iquilezles.org/www/index.htm

- http://blog.three-eyed-games.com/2018/05/03/gpu-ray-tracing-in-unity-part-1/

- http://blog.three-eyed-games.com/2018/05/12/gpu-path-tracing-in-unity-part-2/

- Coding Adventure: Ray Marching

- The Art of Code - Ray Marching playlist

- Peer Play - Raymarching Shader Tutorial playlist

- https://rito15.github.io/posts/ray-marching/

Ref

SDF

- SDF: Signed Distance Field

Examples

- Font rendering

- Alpha maps for LOD

- Ray Marching

Ref

- SIGGRAPH2015 - Dynamic Occlusion with Signed Distance Fields

- NVScene 2015 Session: How to Create Content with Signed Distance Functions (Johann Korndörfer)

- https://iquilezles.org/www/index.htm

SRP Overview

Sobel Filter

Bloom(with DualFilter)

Light Streak

Screen Space Ambient Occlusion

Screen Space Global Illumination

Light Shaft

FXAA

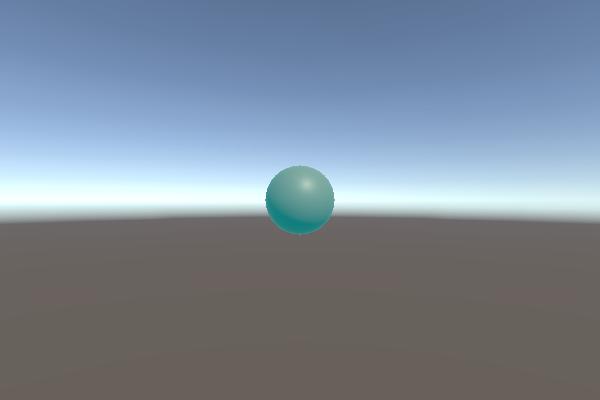

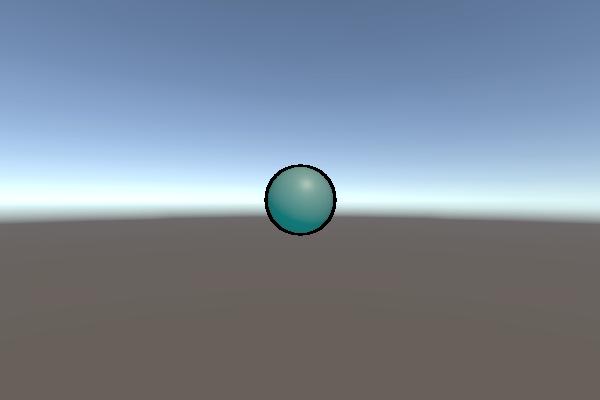

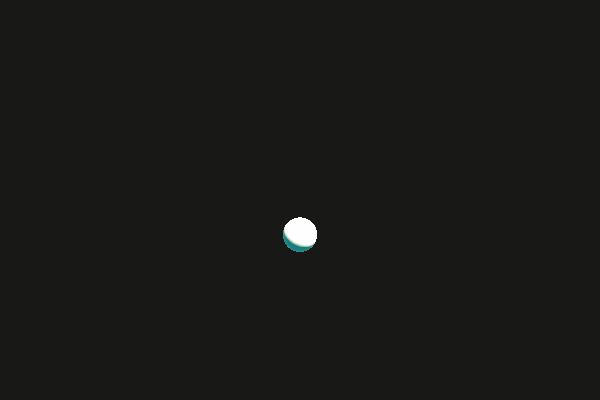

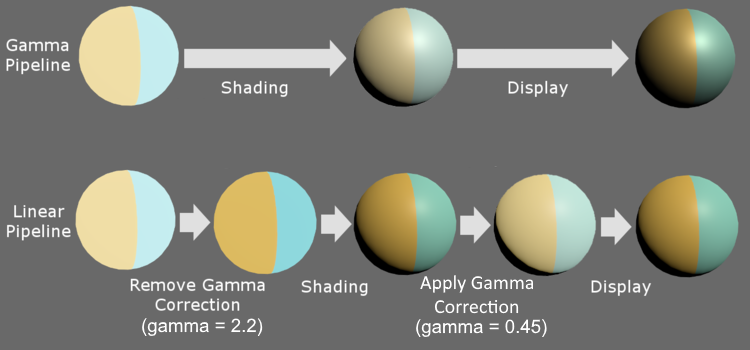

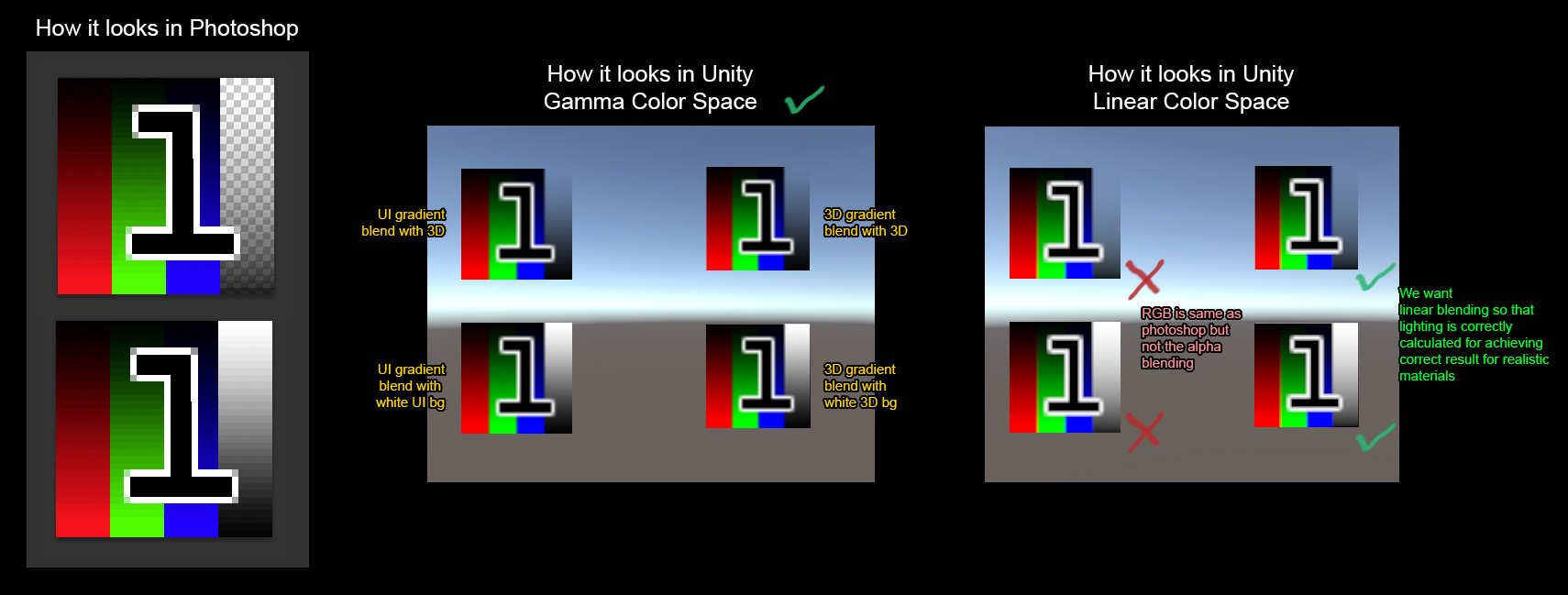

Linear / Gamma

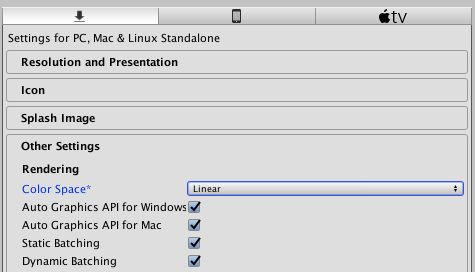

Unity has a Color Space setting that selects between Gamma and Linear.

Edit> Project Settings> Player> Other Settings> Rendering> Color Space

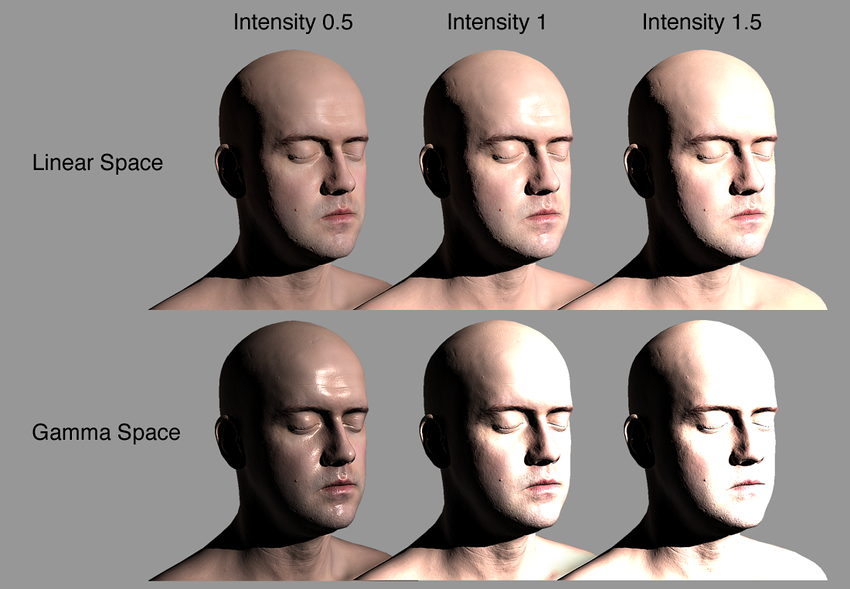

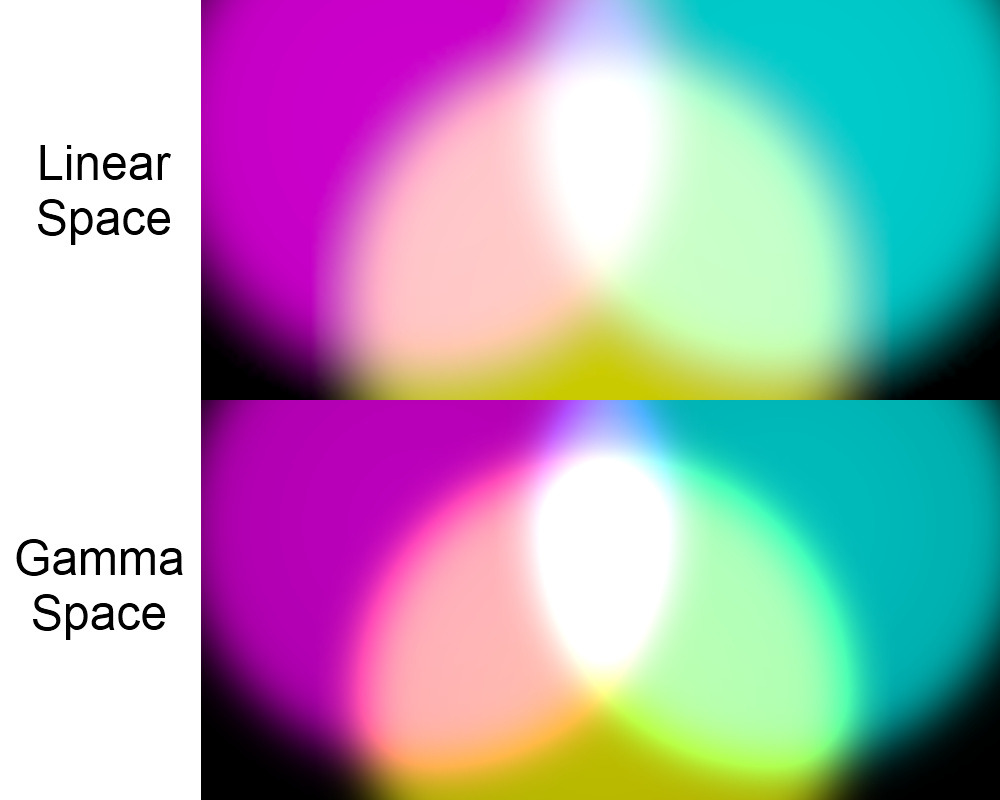

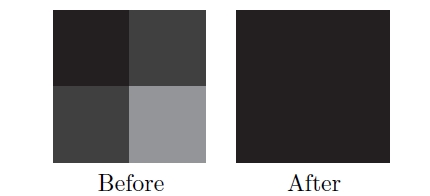

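Gamma / Linear Color Space: difference in results

First, look at the difference.

- Blending in gamma color space ends up with excessive saturation and brightness.

Why do these differences in light intensity and blending arise?

Relationship between Gamma and Linear

The same RGB value is displayed as a different color in Linear and in Gamma.

- Gamma correction (wiki: Gamma correction)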

Gamma Encode/Decode

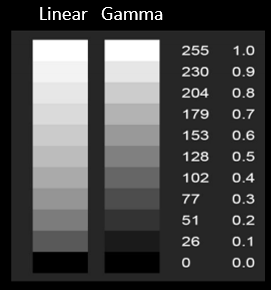

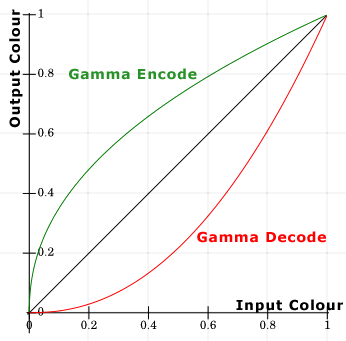

What is Linear, and what is Gamma?

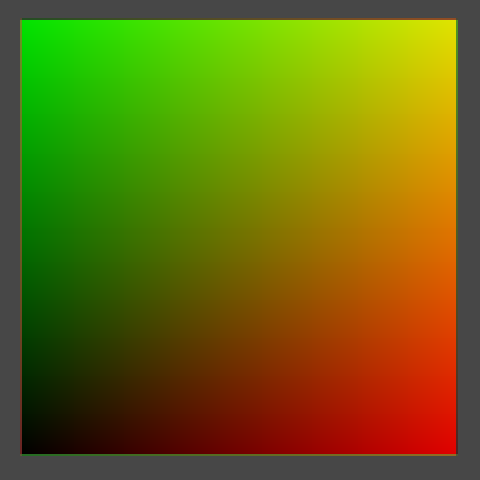

| Curve | Gamma | Gamma Value | Space |

|---|---|---|---|

| green (top) | encode | pow(x, 0.45) (0.45 ≈ 1/2.2) | |

| black (middle) | - | pow(x, 1.0 ) | Linear |

| red (bottom) | decode | pow(x, 2.2 ) | Gamma / sRGB / CRT |

- wiki: sRGB : standard RGB color space.

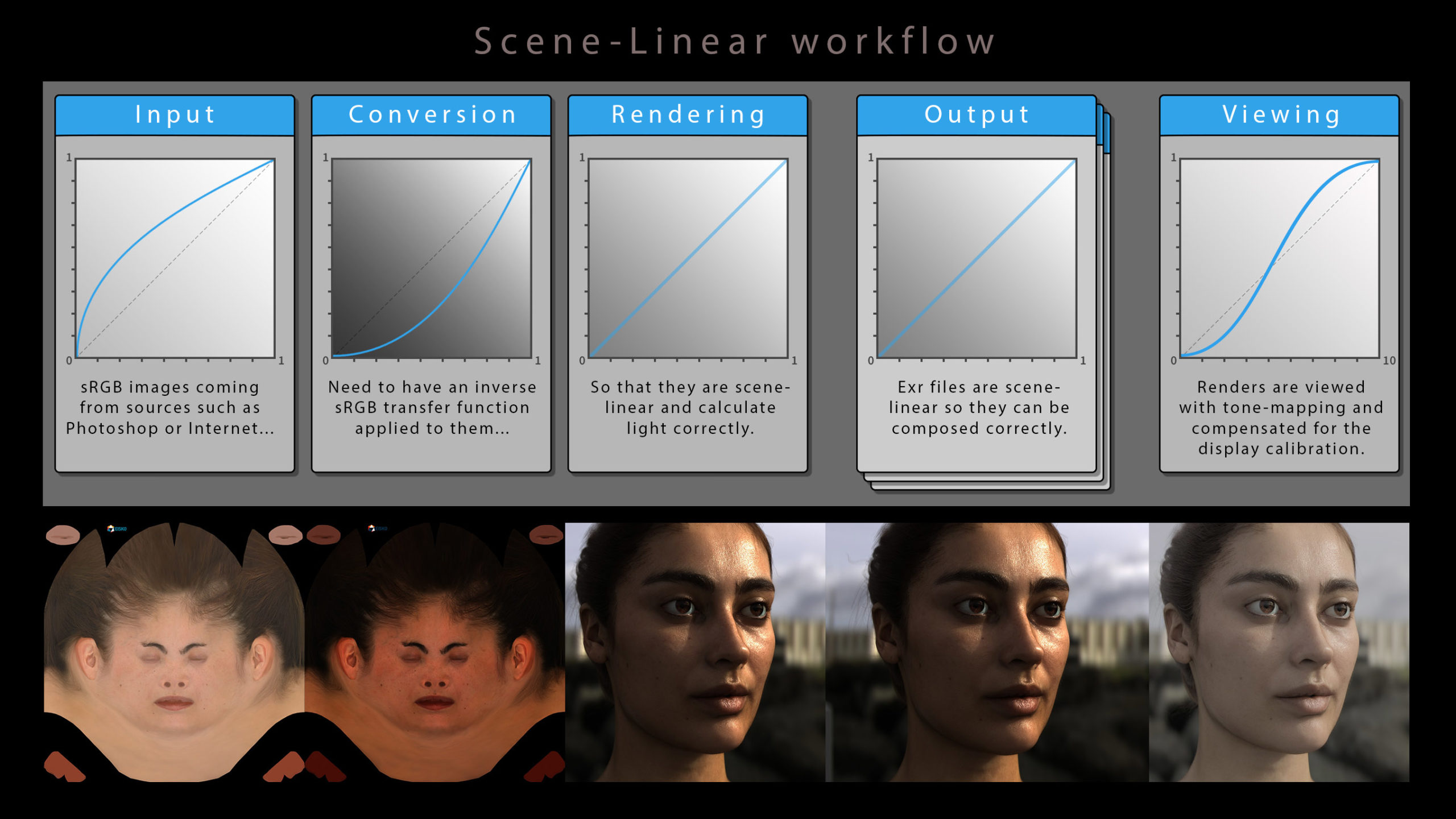

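Gamma / Linear Color Space: working environment

Why do Linear and Gamma affect the final result?

- In the Gamma pipeline, lighting is computed in a linear environment, and the monitor then displays the result with gamma applied.

- To show the lighting result linearly as well, brighten it in advance, before the monitor's gamma darkens it; the linear lighting result then appears correctly on the monitor.

Image authoring environment (gamma correction)

| Environment | Gamma correction | Description |

|---|---|---|

| Photoshop | decode(2.2) when editing / (1) when saving | With Photoshop's default settings: editing (monitor gamma environment) / saving (values as they are, not the monitor gamma environment) |

| Shader | 1 | Shader math runs in a linear environment |

| Monitor | decode(2.2) | |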

Rendering - Gamma Color Space

| Operation | pow(0.5, x) | Value |

|---|---|---|

| encode | pow(0.5, 0.45) | 0.7 (0.7320428479728127) |

| - | pow(0.5, 1) | 0.5 |

| decode | pow(0.5, 2.2) | 0.2 (0.217637640824031) |

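| Environment | Operation | Texture | Shading | Note |
|---|---|---|---|---|
| Monitor (Photoshop) | decode | 0.2 | | |
| Save | encode | 0.5 | | Photoshop color image file |
| Shader (model) | - | 0.5 | 0.5 | Computed with the image in its brightened state |
| Monitor (game) | decode | 0.2 | 0.2 | |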

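- Problems

  - Light falloff

    - In the gamma pipeline, shader results look dark (see the Shading values).

  - Response to light intensity

    - Brightness changes non-linearly, rather than linearly, with the light's intensity.

  - Blending

    - Saturation and brightness can become excessively high.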
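The non-linear response to light intensity can be checked with a little arithmetic. This is a rough sketch that assumes the monitor applies a pure pow(x, 2.2) decode; `monitor_decode` and `gamma_encode` are hypothetical helper names.

```python
def monitor_decode(v: float, gamma: float = 2.2) -> float:
    """What the display does to the value it receives (assumed pow 2.2)."""
    return v ** gamma


def gamma_encode(v: float, gamma: float = 2.2) -> float:
    """Post-process encode used by the linear pipeline before display."""
    return v ** (1.0 / gamma)


# The shader doubles the lighting result: 0.25 -> 0.5.
dim, bright = 0.25, 0.5

# Gamma pipeline: shader output goes straight to the monitor.
gamma_ratio = monitor_decode(bright) / monitor_decode(dim)

# Linear pipeline: shader output is encoded first, then the monitor decodes it.
linear_ratio = monitor_decode(gamma_encode(bright)) / monitor_decode(gamma_encode(dim))

print("gamma pipeline brightness ratio :", gamma_ratio)   # about 4.59 (2 ** 2.2)
print("linear pipeline brightness ratio:", linear_ratio)  # about 2.0
```

In the gamma pipeline a light that is twice as strong in the shader comes out roughly 4.6 times brighter on screen, which is why intensity and falloff feel hard to tune there.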

Rendering - Linear Color Space

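- Gamma Correction

  - Some online articles describe Gamma Correction as setting gamma to 1.0, but that is not the right way to understand it.

  - The wiki defines Gamma Correction as the gamma operation itself.

  - For game development, it fits better to call Gamma Correction the step that adjusts gamma so the image suits the output device.

  - The monitor darkens the image on output, so this is the post-correction that brightens it before output.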

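With sRGB Correction

- When sRGB is checked, Gamma Decode is applied to the RGB channels (the A channel is left as is).

- Alpha is never decoded, whether or not sRGB is checked.

- The spare alpha channel can be used to pack in something like a mask map.

- However, linear alpha in the game differs from the non-linear alpha of tools such as Photoshop, which causes problems in UI alpha blending.

- UI alpha has to be handled separately.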

| Environment | Operation | Texture | Shading | Note |
|---|---|---|---|---|
| Monitor (Photoshop) | decode | 0.2 | | |
| Save | encode | 0.5 | | Photoshop color image file |
| sRGB option | decode | 0.2 | | When sRGB is checked (Gamma decode applied) |
| Shader (model) | - | 0.2 | 0.5 | Computed in the same environment as the authoring environment |
| Shader (post-process) | encode | 0.5 | 0.7 | Final post-processing before display |
| Monitor (game) | decode | 0.2 | 0.5 | |

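Without sRGB Correction

- If sRGB is not checked on a color texture, its colors look washed out.

- ORM

  - Normal textures are raw numeric data, so they have no sRGB option at all.

  - Roughness/Occlusion must have sRGB unchecked.

- Other numeric textures

  - flowmap, etc.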

| Environment | Operation | Texture | Shading | Note |
|---|---|---|---|---|
| Save | encode | 0.5 | | Image file |
| Shader (model) | - | 0.5 | 0.5 | |
| Shader (post-process) | encode | 0.7 | 0.7 | Final post-processing before display |
| Monitor (game) | decode | 0.5 | 0.5 | |

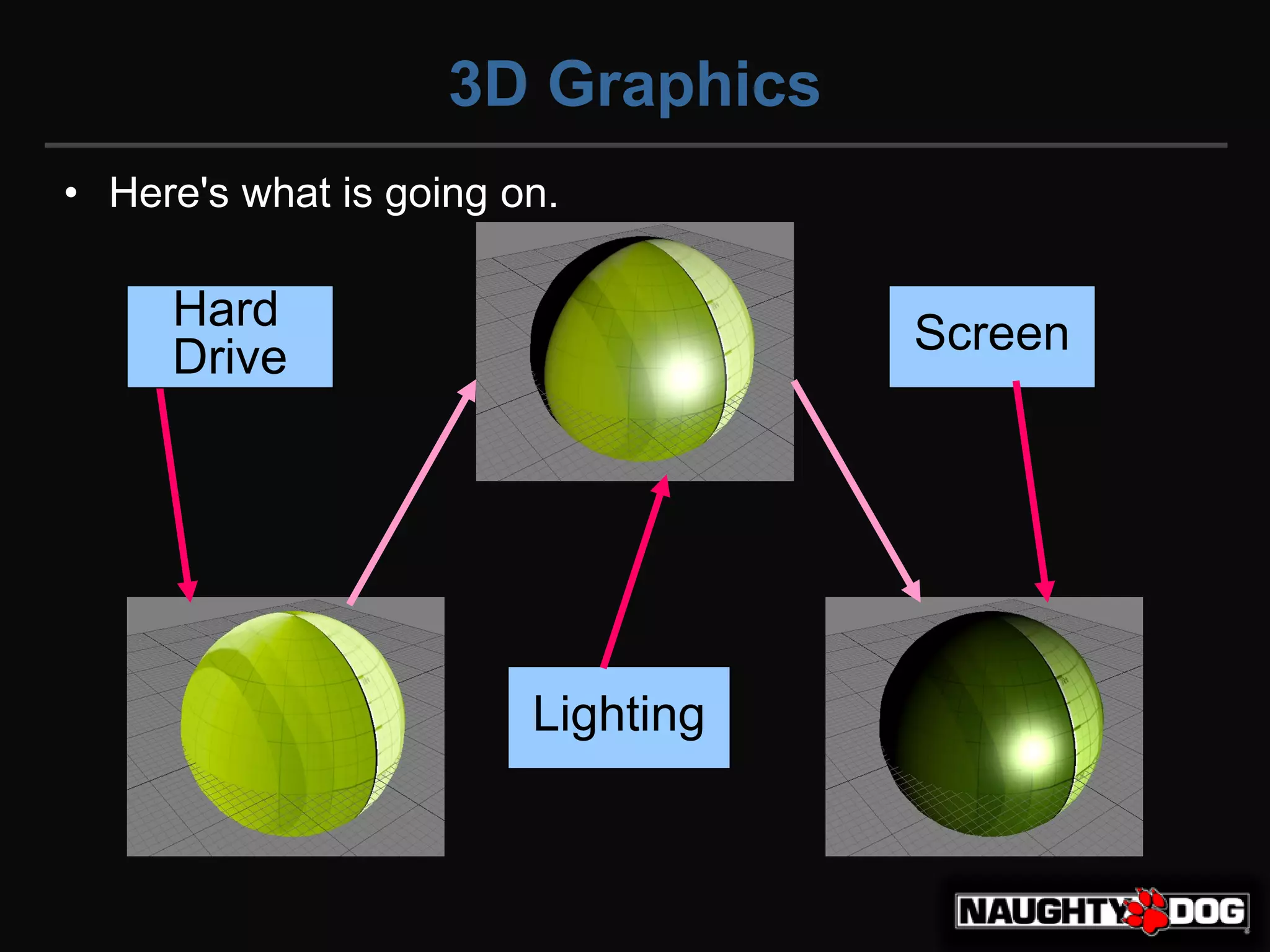

Summary

| Step | Environment | Texture | Shading |
|---|---|---|---|
| | Monitor (Photoshop) | 0.2 | |
| Hard Drive | Save | 0.5 | |
| Lighting | Shader (model) | 0.5 | 0.5 |
| Screen | Monitor (game) | 0.2 | 0.2 |

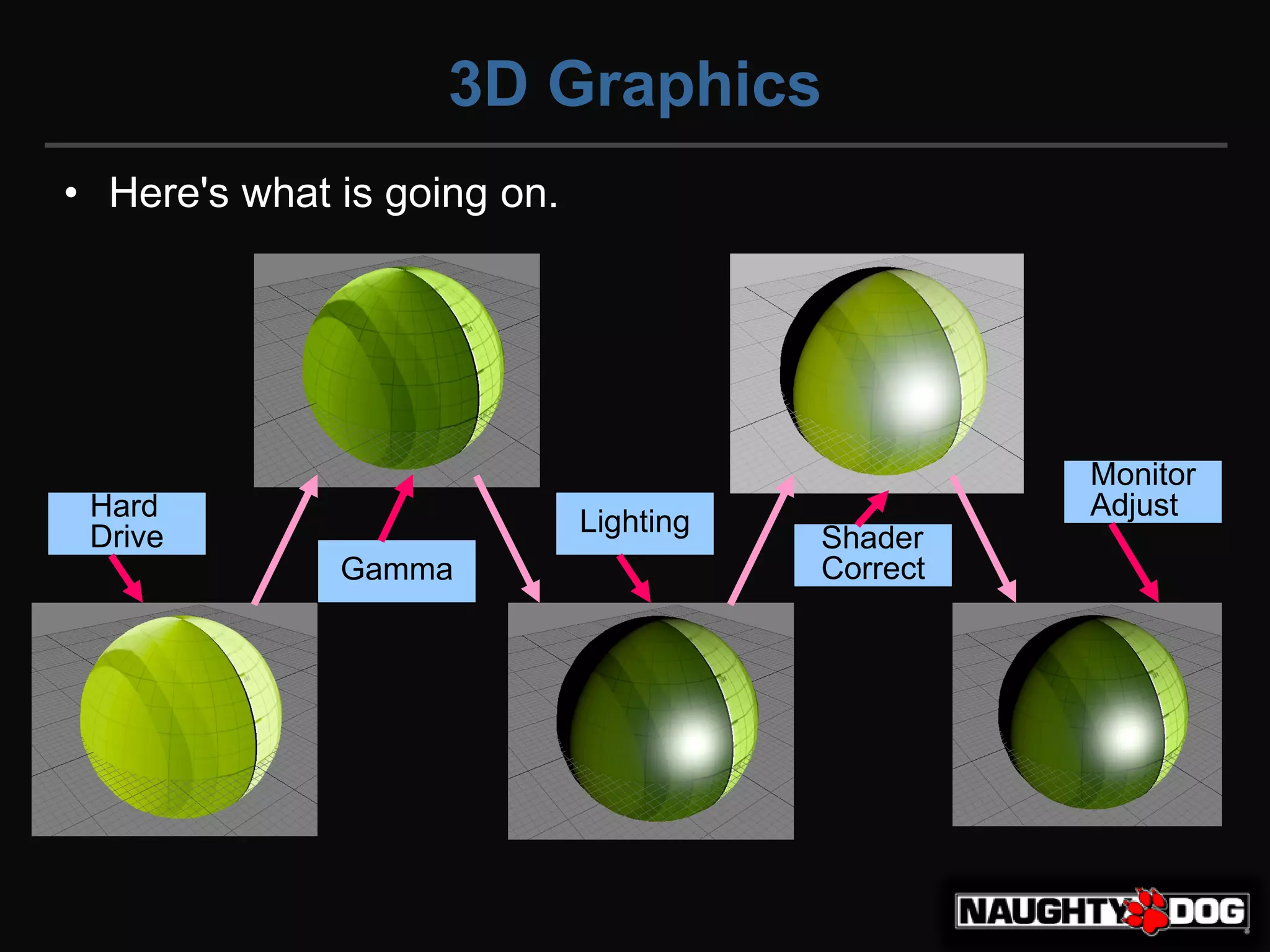

| Step | Environment | Texture | Shading |
|---|---|---|---|
| | Monitor (Photoshop) | 0.2 | |
| Hard Drive | Save | 0.5 | |
| Gamma | sRGB option | 0.2 | |
| Lighting | Shader (model) | 0.2 | 0.5 |
| Shader Correct | Shader (post-process) | 0.5 | 0.7 |
| Monitor Adjust | Monitor (game) | 0.2 | 0.5 |
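The texture column of the tables can be traced step by step. This is a simplified sketch that assumes a pure pow 2.2 curve, follows a single stored texture value of 0.5, and ignores lighting. The function names are hypothetical and only model the stages named in the tables.

```python
def encode(x: float) -> float:
    return x ** (1.0 / 2.2)  # linear -> gamma-encoded


def decode(x: float) -> float:
    return x ** 2.2  # gamma-encoded -> linear


def gamma_pipeline(stored: float) -> float:
    """Gamma color space: the texture is used as stored, then the monitor decodes."""
    shader = stored
    return decode(shader)


def linear_pipeline(stored: float, srgb_checked: bool) -> float:
    """Linear color space: optional sRGB decode, post-process encode, monitor decode."""
    shader = decode(stored) if srgb_checked else stored
    framebuffer = encode(shader)
    return decode(framebuffer)


stored = 0.5
print("gamma pipeline          :", gamma_pipeline(stored))          # about 0.22
print("linear, sRGB checked    :", linear_pipeline(stored, True))   # about 0.22
print("linear, sRGB not checked:", linear_pipeline(stored, False))  # about 0.5
```

With sRGB checked, the texture ends up on screen at the same value the artist saw in Photoshop. With sRGB unchecked, the encode in the post-process has nothing to cancel against, so the texture is displayed brighter than authored.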

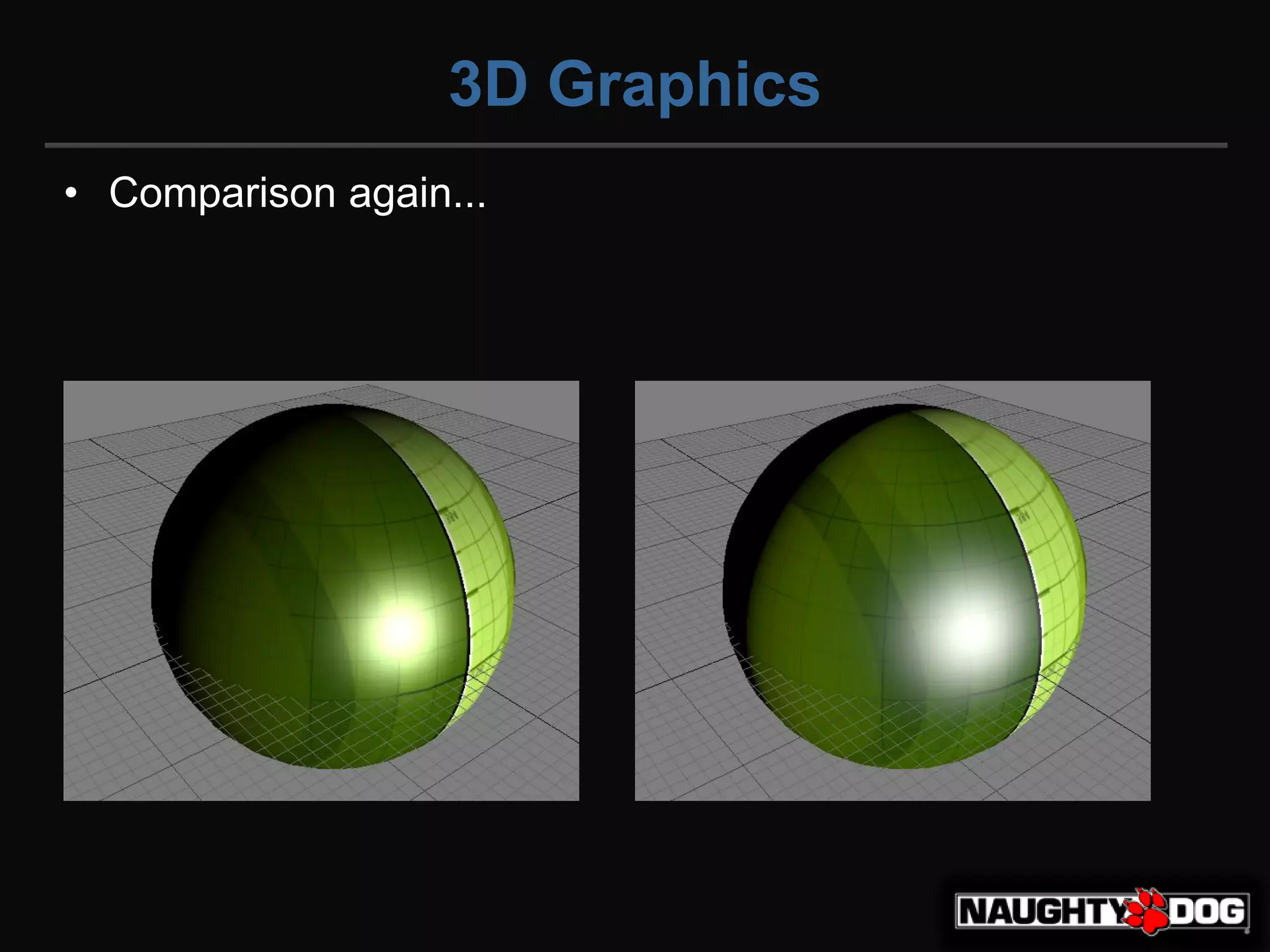

Left: Gamma // Right: Linear

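Things to Watch Out for When Working in Linear Color Space

- Platform support

- Identifying textures that need sRGB correction

- Alpha values of UI textures

Platform Support

- OpenGL ES 2.0 and below support Gamma only.

- Minimum mobile requirements for Linear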

| Platform | Version | API |
|---|---|---|
| Android | Android 4.3 / API level 18 / Jelly Bean | OpenGL ES 3.0 / Vulkan |
| iOS | 8.0 | Metal |

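Identifying Textures That Need sRGB Correction

- Textures whose data is used as is should be treated as Linear.

- The rest, Albedo / Emission, should have sRGB checked so that Gamma Decode is applied.

| Image | sRGB checked | Note |
|---|---|---|
| Albedo | O | Gamma Decode applied |
| Albedo + Smoothness(alpha) | O | sRGB applies to the RGB values only; Alpha is not affected. |
| DataTexture | X | Data used as is |
| NormalMap | No option | Data used as is |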

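Alpha Values of UI Textures

- The Linear environment gives richer expressiveness, but alpha blending of UI colors no longer comes out right.

- From a Linear standpoint the calculation is correct, but it is not what a Photoshop artist expects.

- The sRGB option affects RGB only, so Alpha needs additional handling.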
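The mismatch is easy to see with one pixel. This is a minimal sketch that assumes a pure pow 2.2 curve and assumes the authoring tool blends the stored (gamma-encoded) values directly. The scenario is a white UI element at 50% alpha over a black background, and all names are hypothetical.

```python
def encode(x: float) -> float:
    return x ** (1.0 / 2.2)  # linear -> gamma-encoded


def decode(x: float) -> float:
    return x ** 2.2  # gamma-encoded -> linear


def lerp(a: float, b: float, t: float) -> float:
    return a + (b - a) * t


background, ui_color, alpha = 0.0, 1.0, 0.5  # stored (encoded) values

# Authoring tool: blends the stored values as they are.
authored = lerp(background, ui_color, alpha)

# Linear project: decode, blend in linear space, encode again for display.
in_game = encode(lerp(decode(background), decode(ui_color), alpha))

print("value the artist saw  :", authored)  # 0.5
print("value the game outputs:", in_game)   # about 0.73
```

Both results are "correct" in their own space, but the game output is visibly brighter than what the artist authored, so semi-transparent UI looks different from the mock-up.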

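There are a few approaches:

- Force the setting in Photoshop, or

- Use a UI camera together with SRP.

Photoshop Settings

- Save as Linear from the start.

  - Photoshop Color Settings > Advanced > Blend RGB Colors Using Gamma: 1.00

- Cost

  - Inconvenient for designers to work with.

  - No additional work needed from programmers.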

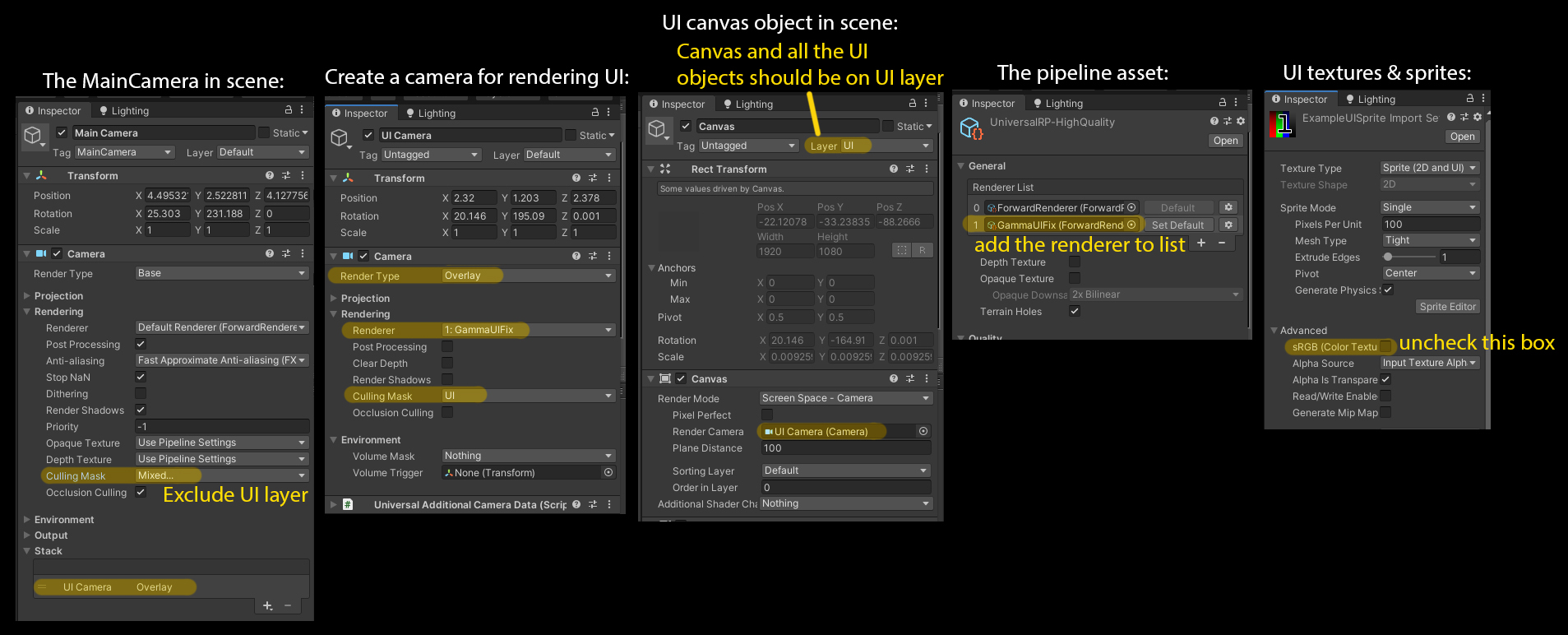

UI Camera + SRP

- Use a separate UI camera and apply gamma to the UI alpha in advance.

- Then blend it with the Game camera output.

- Uncheck sRGB on UI textures

  - Use the sRGB-encoded data as is and handle only Alpha separately.

- Main Camera

  - Camera> Rendering> Culling Mask> Uncheck UI

- UI Camera

  - Camera> Render Type> Overlay

  - Camera> Rendering> Renderer> GameUIFix

  - Camera> Rendering> Culling Mask> UI

- UI Canvas

  - Canvas> Render Camera> UI Camera

- PipelineAsset settings

  - To use _CameraColorTexture: Quality> Anti Aliasing (MSAA)> 2x or higher

- Write a RenderFeature

  - Convert the Game camera output (Linear space) to Gamma space

  - Combine the converted Game camera output with the UI camera output

  - Convert the combined result (Gamma space) back to Linear space

- Add a new Renderer and add the Feature written above

  - General> Renderer List> Add Last GammaUIFix

// To enable _CameraColorTexture, set PipelineAsset> Quality> Anti Aliasing (MSAA) to 2x or higher.

// 1. DrawUIIntoRTPass

// cmd.SetRenderTarget(UIRenderTargetID);

// cmd.ClearRenderTarget(clearDepth: true, clearColor: true, Color.clear);

// 2. BlitPass

// cmd.Blit(DrawUIIntoRTPass.UIRenderTargetID, _colorHandle, _material);

float4 uiColor = SAMPLE_TEXTURE2D(_MainTex, sampler_MainTex, i.uv);

uiColor.a = LinearToGamma22(uiColor.a);

float4 mainColor = SAMPLE_TEXTURE2D(_CameraColorTexture, sampler_CameraColorTexture, i.uv);

mainColor.rgb = LinearToGamma22(mainColor.rgb);

float4 finalColor;

finalColor.rgb = lerp(mainColor.rgb, uiColor.rgb, uiColor.a);

finalColor.rgb = Gamma22ToLinear(finalColor.rgb);

finalColor.a = 1;
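The blit shader above can be sanity-checked on a single channel outside Unity. This is a rough Python port, assuming `LinearToGamma22` and `Gamma22ToLinear` are plain pow(x, 1/2.2) and pow(x, 2.2). The Python names mirror the shader but are hypothetical helpers.

```python
def linear_to_gamma22(x: float) -> float:
    return x ** (1.0 / 2.2)


def gamma22_to_linear(x: float) -> float:
    return x ** 2.2


def lerp(a: float, b: float, t: float) -> float:
    return a + (b - a) * t


def composite_ui(main_linear: float, ui_rgb: float, ui_alpha: float) -> float:
    """One-channel version of the blit: scene to gamma, blend UI, back to linear."""
    ui_alpha = linear_to_gamma22(ui_alpha)        # uiColor.a = LinearToGamma22(uiColor.a)
    main_gamma = linear_to_gamma22(main_linear)   # mainColor.rgb = LinearToGamma22(...)
    blended = lerp(main_gamma, ui_rgb, ui_alpha)  # lerp in gamma space
    return gamma22_to_linear(blended)             # back to linear for the rest of the pipeline


print(composite_ui(0.3, 0.8, 0.0))  # no UI coverage: scene value comes back unchanged
print(composite_ui(0.3, 0.8, 1.0))  # full UI coverage: UI value decoded to linear
print(composite_ui(0.3, 0.8, 0.5))  # partial coverage: blended in gamma space
```

The two extremes are the useful checks: with alpha 0 the scene must pass through untouched, and with alpha 1 the result must be the UI color alone.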

Ref

- [GDC2010] GDCVault: Uncharted-2-HDR

- slideshare: Lighting Shading by John Hable

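- 정종필's explanations of linear/gamma

  - Caveats when reading 정종필's explanations

    - They do not clearly separate textures from lighting calculations, and place a graph alongside to emphasize what linear means.

    - At first, seeing that the images differ makes it easy to think you have understood, but because textures and lighting are not explained separately, it can become more confusing later.

    - Be sure to check Uncharted-2-HDR.

  - 정종필 - What Are Gamma Color Space and Linear Color Space? (Gamma Color space와 Linear Color space란?)

  - 정종필 - A Linear Pipeline for Calculations in Lighting and Shaders (라이팅과 셰이더에서 연산을 위한 선형 파이프라인)

  - 정종필 - Using and Applying sRGB/Linear in Unity Shaders (유니티 셰이더에서 sRGB/Linear 사용 및 응용)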

- GPU Gems 3 - Chapter 24. The Importance of Being Linear

- Article - Gamma and Linear Spaces

- [데브루키] Color space gamma correction

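- Tips for UI Work in Linear Rendering (선형(Linear) 렌더링에서의 UI 작업할때 요령)

- 201205 On the Problem of UI Alpha Values Changing in Unity Linear Color Space (Unity Linear color space에서 UI의 alpha 값이 바뀌는 문제에 대하여..)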

- 3D scene need Linear but UI need Gamma

- https://nbertoa.wordpress.com/2016/06/20/gamma-correction/

- https://chrisbrejon.com/cg-cinematography/chapter-1-color-management/

Relationship between Shader Models and Platforms

Shader Model

- https://docs.unity3d.com/Manual/SL-ShaderCompileTargets.html

- Metal

  - Geometry shader support (not supported so far, up to 5.0)

  - GetDimensions is not supported in compute

- es3.1

  - Only 4 compute buffers are guaranteed.

| model | desc |

|---|---|

| 2.5 | derivatives |

| 3.0 | 2.5 + interpolators10 + samplelod + fragcoord |

| 3.5 | 3.0 + interpolators15 + mrt4 + integers + 2darray + instancing |

| 4.0 | 3.5 + geometry |

| 4.5 | 3.5 + compute + randomwrite |